Book: The TCP/IP guide by Charles M. Kozierok

Chapter 1. Networking Introduction, Characteristics, and Types

A networking protocol defines a set of rules, algorithms, messages, and other mechanisms that enables software and hardware in networked devices to communicate effectively. A protocol usually describes a means for communication between corresponding entities at the same OSI Reference Model layer in two or more devices.

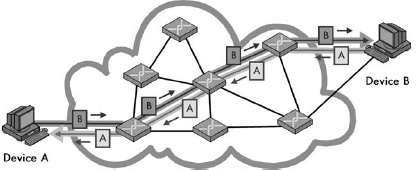

Circuit switching

Figure 1-1. Circuit switching In a circuit-switched network, before communication can occur between two devices, a circuit is established between them. This is shown as a darker line for the conduit of data from Device A to Device B, and a matching lighter line from B back to A. Once it’s set up, all communication between these devices takes place over this circuit, even though there are other possible ways that data could conceivably be passed over the network of devices between them. Contrast this diagram to Figure 1-2.

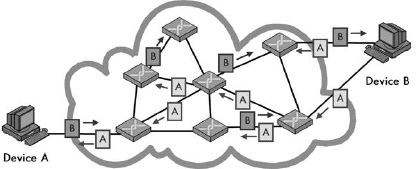

Packet switching

Figure 1-2. Packet switching In a packet-switched network, no circuit is set up prior to sending data between devices. Blocks of data, even from the same file or communication, may take any number of paths as they journey from one device to another. Compare this to Figure 1-1.

One way that networking technologies are categorized is based on the path used to carry data between devices. In circuit switching, a circuit is first established and then used to carry all data between devices. In packet switching, no fixed path is created between devices that communicate; instead, the data is broken into packets, each of which may take a separate path from sender to recipient.
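The packet-switching idea can be illustrated with a minimal Python sketch. The sequence numbers, the 8-byte packet size, and the function names `packetize` and `reassemble` are assumptions made for illustration; they are not taken from any particular protocol.

```python
import random

def packetize(data: bytes, size: int) -> list[tuple[int, bytes]]:
    """Split data into (sequence_number, chunk) packets of at most `size` bytes."""
    return [(seq, data[i:i + size])
            for seq, i in enumerate(range(0, len(data), size))]

def reassemble(packets: list[tuple[int, bytes]]) -> bytes:
    """Sort packets by sequence number and join their chunks."""
    return b"".join(chunk for _, chunk in sorted(packets))

message = b"Blocks of data may take any number of paths."
packets = packetize(message, size=8)
random.shuffle(packets)   # simulate packets arriving in a different order
restored = reassemble(packets)
```

Because each packet may travel independently, the receiver in this sketch relies on the sequence number, not arrival order, to rebuild the original data.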

Connection-Oriented and Connectionless Protocols

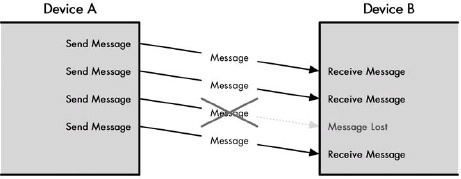

A connection-oriented protocol is one in which a logical connection is first established between devices prior to data being sent. In a connectionless protocol, data is just sent without a connection being created.

Circuit-switched networking technologies are inherently connection-oriented, but not all connection-oriented technologies use circuit switching. Logical connection-oriented protocols can be implemented on top of packet-switching networks to provide higher-layer services to applications that require connections.

Messages: Packets, Frames, Datagrams, and Cells

Communication between devices on packet-switched networks is based on items most generically called messages. These pieces of information also go by other names such as packets, datagrams, frames, and cells, which often correspond to protocols at particular layers of the OSI Reference Model. The formal OSI terms for messages are protocol data unit (PDU) and service data unit (SDU).

Message Formatting: Headers, Payloads, and Footers

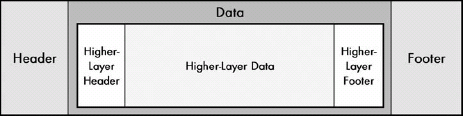

Figure 1-3. Network message formatting In the most general of terms, a message consists of a data payload that will be communicated, bracketed by a set of header and footer fields. The data of any particular message sent in a networking protocol will itself contain an encapsulated higher-layer message containing a header, data, and a footer. This “nesting” can occur many times as data is passed down a protocol stack. The header is found in most protocol messages; the footer only in some.

The general format of a networking message consists of a header, followed by the data or payload of the message, followed optionally by a footer. Header and footer information is functionally the same except for its position in the message; footer fields are only sometimes used, especially in cases where the data in the field is calculated based on the values of the data being transmitted.
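As a rough illustration of the header, payload, and footer layout, the following sketch builds and parses a message. The field layout is hypothetical (a 1-byte type and 2-byte length in the header, a CRC-32 in the footer); it is chosen only to show why a footer suits values calculated from the transmitted data.

```python
import struct
import zlib

HEADER = struct.Struct("!BH")  # hypothetical header: 1-byte type, 2-byte payload length
FOOTER = struct.Struct("!I")   # hypothetical footer: CRC-32 computed over the payload

def build_message(msg_type: int, payload: bytes) -> bytes:
    """Return header + payload + footer as one byte string."""
    header = HEADER.pack(msg_type, len(payload))
    footer = FOOTER.pack(zlib.crc32(payload))
    return header + payload + footer

def parse_message(message: bytes) -> tuple[int, bytes]:
    """Split a message into (type, payload), checking the footer checksum."""
    msg_type, length = HEADER.unpack_from(message, 0)
    payload = message[HEADER.size:HEADER.size + length]
    (crc,) = FOOTER.unpack_from(message, HEADER.size + length)
    if crc != zlib.crc32(payload):
        raise ValueError("footer checksum does not match payload")
    return msg_type, payload
```

Placing the checksum after the payload lets a sender compute it while the data is being transmitted, which is one reason calculated fields tend to appear in footers.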

Message Addressing and Transmission Methods: Unicast, Broadcast, and Multicast

Figure 1-4. Unicast, multicast, and broadcast message addressing and transmission The three basic types of addressing and message delivery in networking are illustrated in this simplified LAN. Device 6 is sending a unicast message to Device 2, shown as the dark, heavy arrow. Device 4 is sending a multicast message to multicast group X, shown as the medium-weight arrows. In this case, that group includes Devices 1 and 3, which are highlighted. Finally, Device 5 is sending a broadcast message, which goes to all other devices on the LAN, shown as the thin, faint arrows.

Three basic methods are used to address and transmit data between networked devices. A unicast transmission goes from one device to exactly one other; this is the most common method used for most message transactions. A broadcast transmission is sent from one device to all connected devices on a network. A multicast transmission is addressed and sent to a select group of devices.
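The three delivery methods can be sketched as functions that return the set of devices receiving a message. The device numbers and group X mirror the simplified LAN of Figure 1-4; the function names and the set-based model are assumptions made for illustration.

```python
DEVICES = {1, 2, 3, 4, 5, 6}   # the six devices on the example LAN
GROUPS = {"X": {1, 3}}         # multicast group X from Figure 1-4

def unicast(sender: int, target: int) -> set[int]:
    """Exactly one recipient."""
    return {target}

def multicast(sender: int, group: str) -> set[int]:
    """Every member of the named group, other than the sender."""
    return GROUPS[group] - {sender}

def broadcast(sender: int) -> set[int]:
    """Every device on the network other than the sender."""
    return DEVICES - {sender}
```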

Network Structural Models and Client-Server and Peer-to-Peer Networking

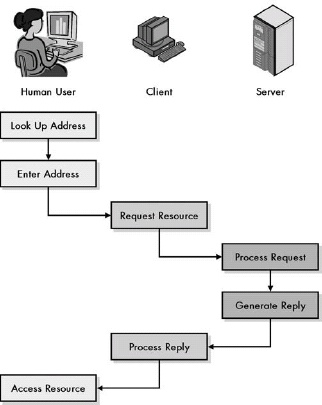

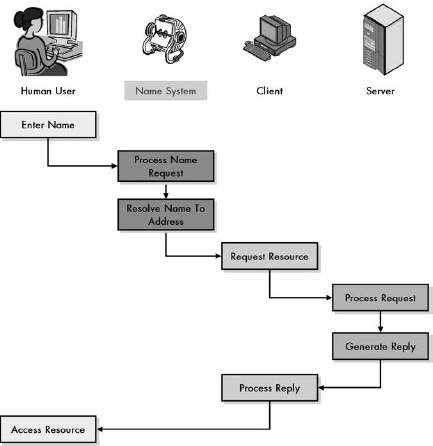

Networks are usually configured to share resources using one of two basic structural models. In a peer-to-peer network, each device is an equal, and none are assigned particular jobs. In a client-server network, however, devices are assigned particular roles—a small number of powerful computers are set up as servers and respond to requests from the other devices, which are clients. Client-server computing also refers to the interaction between complementary protocol elements and software programs. It’s rising in popularity due to its prevalence in TCP/IP and Internet applications.

Figure 1-5. Peer-to-peer networking In this model, each device on the network is treated as a peer, or equal. Each device can send requests and responses, and none are specifically designated as performing a particular role. This model is more often used in very small networks. Contrast this with Figure 1-6.

Figure 1-6. Client-server networking In the client-server model, a small number of devices are designated as servers and equipped with special hardware and software that allows them to interact efficiently and simultaneously with multiple client machines. Though the clients can still interact with each other, most of the time they send requests of various sorts to the server, and the server sends back responses to them. Contrast this with the peer-to-peer networking example in Figure 1-5.

Types and Sizes of Networks

Networks are often divided by size and general communication method into three classes. Local area networks (LANs) generally connect proximate devices, usually using cables. Wireless LANs (WLANs) are like cabled LANs but use radio frequency or light technology to connect devices without wires. Wide area networks (WANs) connect distant devices or LANs to each other. Campus area networks (CANs) and metropolitan area networks (MANs) fall between LANs and WANs in terms of overall size. Personal area networks (PANs) are like very small LANs and often appear as wireless PANs (WPANs).

Segments, Networks, Subnetworks, and Internetworks

Several terms are often used to describe the relative sizes of networks and parts of networks. The most basic term is network itself, which can refer to most anything, but often means a set of devices connected using an OSI layer 2 technology. A subnetwork is a part of a network (or internetwork), as is a segment, though the latter often has a more specific meaning in certain technologies. An internetwork refers either generically to a very large network, or specifically, to a set of layer 2 networks connected using routers at layer 3.

The Internet, Intranets, and Extranets

The generic noun internet is a short form for the word internetwork, while the proper noun Internet refers to the global internetwork of TCP/IP networks that we all know and use. The term intranet refers to an internal network that uses TCP/IP technologies as the Internet does. An extranet is like an intranet that is extended to individuals or organizations outside the company. All these terms can be used ambiguously, so you must take care to determine exactly what they mean in any given context.

Chapter 5. GENERAL OSI REFERENCE MODEL ISSUES AND CONCEPTS

History of the OSI Reference Model

The Open Systems Interconnection Reference Model (OSI Reference Model or OSI model) was originally created as the basis for designing a universal set of protocols called the OSI protocol suite. This suite never achieved widespread success, but the model became a very useful tool for both education and development. The model defines a set of layers and a number of concepts for their use that make understanding networks easier.

General Reference Model Issues

The Benefits of Networking Models

Networking models such as the OSI Reference Model provide a framework for breaking down complex internetworks into components that can more easily be understood and utilized. The model defines networking functions not as a large, complicated whole, but as a set of layered, modular components, each of which is responsible for a particular function. The result is better comprehension of network operations, improved performance and functionality, easier design and development, and the ability to combine different components in a way that’s best suited to the needs of the network.

Why Understanding the OSI Reference Model Is Important to You

While many people scoff at the notion of studying the OSI Reference Model, understanding it is very helpful in making sense of networking protocols and technologies. The model is theoretical, but its concepts are employed regularly to describe the operation of real-world networks.

How to Use the OSI Reference Model

It is just as much a mistake to assign too much importance to the OSI Reference Model as too little. While the model defines a framework for understanding networks, not all networking components, protocols, and technologies will necessarily fall into the model’s strict layering architecture. There are cases in which trying to use the model to describe certain concepts can lead to less clarity rather than more. You should remember that the OSI model is a tool and should be used accordingly.

Key OSI Reference Model Concepts

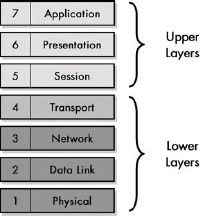

OSI Reference Model Networking Layers, Sublayers, and Layer Groupings

The most fundamental concept in the OSI Reference Model is the division of networking functions into a set of layers, from layer 1 at the bottom to layer 7 at the top. As you go up the layer stack, you move away from concrete, hardware-specific functions to ones that are increasingly abstract, until you reach the realm of user applications at layer 7. The seven layers are sometimes divided into groupings: the lower layers (1 through 3) and the upper layers (4 through 7). There is some disagreement on whether layer 4 is a lower or upper layer.

Figure 5-1. OSI Reference Model layers The OSI Reference Model divides networking functions into a stack of seven layers, numbered 1 through 7 from the bottom up, and sometimes divided into two layer groupings—the lower layers and the upper layers.

The four lower layers of the OSI model are most often discussed individually, because the boundaries between them are reasonably clear-cut. In contrast, the lines between the session, presentation, and application layers are somewhat blurry. As a result, sometimes protocols span two or even all three of these layers; this is especially true of TCP/IP application protocols, since the TCP/IP model treats layers 5 through 7 as a single layer.

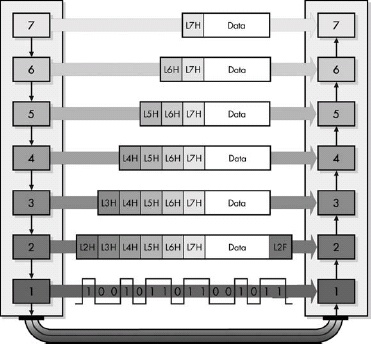

Data Encapsulation, Protocol Data Units (PDUs), and Service Data Units (SDUs)

Figure 5-5. OSI Reference Model data encapsulation Each protocol creates a protocol data unit (PDU) for transmission, each of which includes headers required by that protocol and data to be transmitted. This data becomes the service data unit (SDU) of the next layer below it.

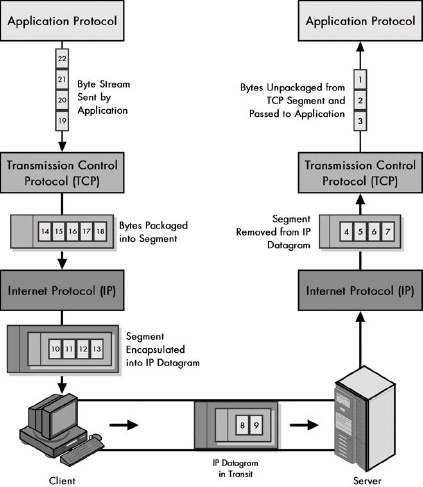

The message used to communicate information for a particular protocol is called its protocol data unit (PDU) in OSI model terminology. That PDU is passed down to the next lower layer for transmission; since that layer is providing the service of handling that PDU, it is called the lower layer’s service data unit (SDU). The SDU is encapsulated into that layer’s own PDU and, in turn, sent to the next lower layer in the stack, proceeding until the physical layer is reached. The process is reversed on the recipient device. In summary, a layer N PDU is a layer N-1 SDU, which is encapsulated into a layer N-1 PDU.
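The PDU/SDU relationship can be sketched in a few lines of Python. The bracketed header tags such as `[L4]` are placeholders invented for this example, and only layers 4 through 2 are modeled.

```python
# Hypothetical per-layer headers; real protocols use structured binary headers.
HEADERS = {4: b"[L4]", 3: b"[L3]", 2: b"[L2]"}

def send_down(app_data: bytes) -> bytes:
    """Encapsulate data on its way down the stack, layer 4 to layer 2."""
    pdu = app_data
    for layer in (4, 3, 2):
        sdu = pdu                    # the PDU from the layer above is this layer's SDU
        pdu = HEADERS[layer] + sdu   # this layer's PDU = its own header + SDU
    return pdu

def pass_up(frame: bytes) -> bytes:
    """Reverse the process on the recipient: strip headers from layer 2 up to layer 4."""
    pdu = frame
    for layer in (2, 3, 4):
        header = HEADERS[layer]
        if not pdu.startswith(header):
            raise ValueError(f"missing layer {layer} header")
        pdu = pdu[len(header):]
    return pdu
```

In this sketch, `send_down(b"hello")` produces `b"[L2][L3][L4]hello"`, showing the nesting of each higher-layer message inside the one below it.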

Chapter 6. OSI REFERENCE MODEL LAYERS

Physical Layer (Layer 1)

The lowest layer in the OSI Reference Model is the physical layer. It is the realm of networking hardware specifications, and is the place where technologies that perform data encoding, signaling, transmission, and reception functions reside. The physical layer is closely related to the data link layer.

Data Link Layer (Layer 2)

The second OSI Reference Model layer is the data link layer. This is where most LAN and wireless LAN technologies are defined. Layer 2 is responsible for logical link control (LLC), media access control (MAC), hardware addressing, error detection and handling, and defining physical layer standards. It is often divided into the LLC and MAC sublayers based on the IEEE 802 Project that uses that architecture.

Network Layer (Layer 3)

The OSI Reference Model’s third layer is the network layer. This is one of the most important layers in the model; it is responsible for the tasks that link together individual networks into internetworks. Network layer functions include internetwork-level addressing, routing, datagram encapsulation, fragmentation and reassembly, and certain types of error handling and diagnostics. The network layer and transport layer are closely related to each other.

Transport Layer (Layer 4)

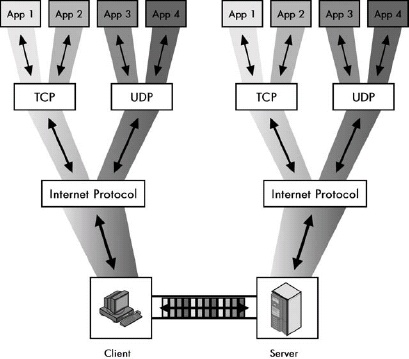

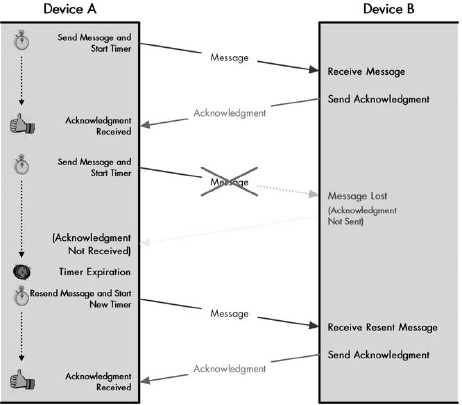

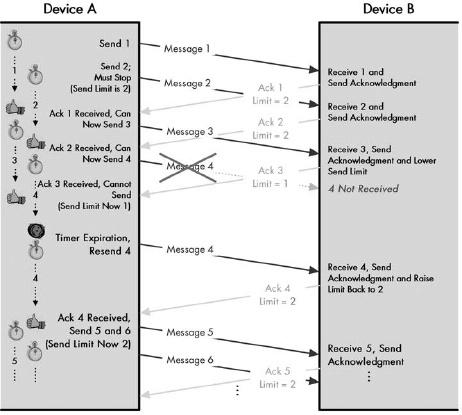

The fourth and middle OSI Reference Model layer is the transport layer. This layer represents the transition point between the lower layers that deal with data delivery issues, and the higher ones that work with application software. The transport layer is responsible for enabling end-to-end communication between application processes, which it accomplishes in part through the use of process-level addressing and multiplexing or demultiplexing. Transport layer protocols are responsible for segmenting application data into blocks for transmission and may be either connection-oriented or connectionless. Protocols at this layer also often provide data delivery management services such as reliability and flow control.
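Segmentation and demultiplexing can be illustrated with a small sketch. The port numbers 8080 and 2525, the 4-byte segment size, and the function names are arbitrary assumptions; real transport protocols carry considerably more header information than a port number.

```python
from collections import defaultdict
from itertools import zip_longest

def segment(port: int, data: bytes, size: int) -> list[tuple[int, bytes]]:
    """Split application data into (destination_port, chunk) segments."""
    return [(port, data[i:i + size]) for i in range(0, len(data), size)]

def demultiplex(segments: list[tuple[int, bytes]]) -> dict[int, bytes]:
    """Deliver each segment to the process identified by its port number."""
    inboxes = defaultdict(bytes)
    for port, chunk in segments:
        inboxes[port] += chunk
    return dict(inboxes)

web = segment(8080, b"GET /index.html", 4)
mail = segment(2525, b"HELO example", 4)
# Multiplexing: segments from both processes share one stream on the wire.
wire = [s for pair in zip_longest(web, mail) for s in pair if s is not None]
delivered = demultiplex(wire)
```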

Session Layer (Layer 5)

The fifth layer in the OSI Reference Model is the session layer. As its name suggests, it is the layer intended to provide functions for establishing and managing sessions between software processes. Session layer technologies are often implemented as sets of software tools called application program interfaces (APIs), which provide a consistent set of services that allow programmers to develop networking applications without needing to worry about lower-level details of transport, addressing, and delivery.

Presentation Layer (Layer 6)

The sixth OSI model layer is the presentation layer. Protocols at this layer take care of manipulation tasks that transform data from one representation to another, such as translation, compression, and encryption. In many cases, no such functions are required in a particular networking stack; if so, there may not be any protocol active at layer 6, and layer 7 may interact directly with layer 5.

Application Layer (Layer 7)

The application layer is the seventh and highest layer in the OSI Reference Model. Application protocols that implement specific user applications and other high-level functions are defined at this layer. Since they are at the top of the stack, application protocols are the only ones that do not provide services to a higher layer; they use services provided by the layers below.

Chapter 8. TCP/IP PROTOCOL SUITE AND ARCHITECTURE

TCP/IP History and Development

TCP/IP was initially developed in the 1970s as part of an effort to define a set of technologies to operate the fledgling Internet. The name TCP/IP came about when the original Transmission Control Program (TCP) was split into the Transmission Control Protocol (TCP) and Internet Protocol (IP). The first modern versions of these two key protocols were documented in 1980 as TCP version 4 and IP version 4, respectively.

Important Factors in the Success of TCP/IP

While TCP/IP is not the only internetworking protocol suite, it is definitely the most important one. Its unparalleled success is due to a wide variety of factors. These include its technical features, such as its routing-friendly design and scalability, its historical role as the protocol suite of the Internet, and its open standards and development process, which reduce barriers to acceptance of TCP/IP protocols.

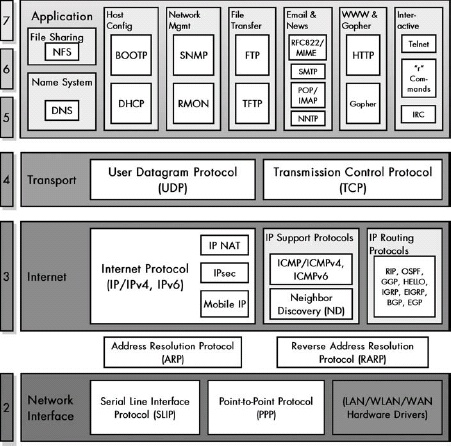

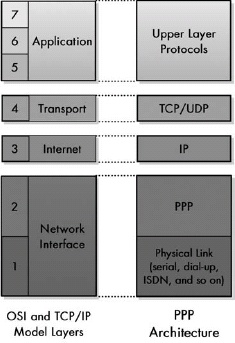

TCP/IP Architecture and the TCP/IP Model

The architecture of the TCP/IP protocol suite is often described in terms of a layered reference model called the TCP/IP model, DARPA model, or DoD model. The TCP/IP model includes four layers: the network interface layer (responsible for interfacing the suite to the physical hardware on which it runs), the Internet layer (where device addressing, basic datagram communication, and routing take place), the host-to-host transport layer (where connections are managed and reliable communication is ensured), and the application layer (where end-user applications and services reside). The first three layers correspond to layers 2 through 4 of the OSI Reference Model respectively; the application layer is equivalent to OSI layers 5 to 7.
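The layer correspondence described above can be written down as a simple lookup table. The dictionary and function names are illustrative only.

```python
from typing import Optional

# TCP/IP model layer -> corresponding OSI Reference Model layer numbers
TCPIP_TO_OSI = {
    "network interface": (2,),
    "internet": (3,),
    "host-to-host transport": (4,),
    "application": (5, 6, 7),
}

def tcpip_layer_for(osi_layer: int) -> Optional[str]:
    """Return the TCP/IP layer covering an OSI layer, or None if there is none."""
    for name, osi_layers in TCPIP_TO_OSI.items():
        if osi_layer in osi_layers:
            return name
    return None
```

Under this mapping, OSI layer 1 has no counterpart: the network interface layer connects the suite to the physical hardware but corresponds to layer 2.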

TCP/IP Protocols

Figure 8-3. TCP/IP protocols This diagram shows all the TCP/IP protocols covered in this book, arranged by TCP/IP and OSI Reference Model layer (with the exception of the administration utilities).

Chapter 9. TCP/IP SERIAL LINE INTERNET PROTOCOL (SLIP) AND POINT-TO-POINT PROTOCOL (PPP) OVERVIEW AND FUNDAMENTALS

SLIP versus PPP

SLIP and PPP provide layer 2 connectivity for TCP/IP implementations that run directly over a physical layer link without a layer 2 technology. While SLIP is simpler, PPP is favored due to its many features and capabilities.

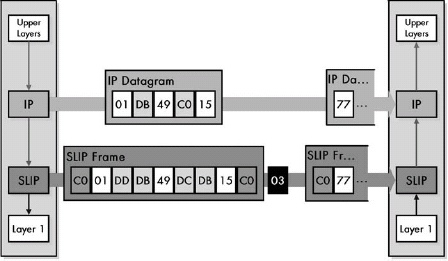

Serial Line Internet Protocol (SLIP)

SLIP provides a layer 2 framing service for IP datagrams but no other features or capabilities.

SLIP was designated nonstandard because it was developed as a very rudimentary, stopgap measure to provide layer 2 framing when needed. SLIP is so simple that there really isn’t much to standardize. It also has so many deficiencies that the Internet Engineering Task Force (IETF) apparently didn’t want to formalize it as a standard. RFC 1055 specifically mentions various problems with SLIP (as I’ll discuss later in this chapter) and the fact that work was already under way to define PPP as a more capable successor to SLIP.

SLIP Data Framing Method and General Operation

SLIP does the following:

- Breaks an IP datagram into bytes

- Sends the END character (value 192) after the last byte of the datagram; in better implementations, it sends the END character before the first byte as well

- Replaces any byte to be sent in the datagram that is 192 with 219 220

- Replaces any byte to be sent that is 219 with 219 221
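The framing rules above can be sketched in Python as follows. The constant names follow RFC 1055's terminology (END, ESC, ESC_END, ESC_ESC); the function names are made up for this example, and the decoder assumes its input holds exactly one framed datagram.

```python
END, ESC, ESC_END, ESC_ESC = 192, 219, 220, 221

def slip_encode(datagram: bytes) -> bytes:
    """Frame a datagram: END before and after, with special bytes escaped."""
    out = bytearray([END])          # leading END, as in better implementations
    for b in datagram:
        if b == END:
            out += bytes([ESC, ESC_END])   # 192 -> 219 220
        elif b == ESC:
            out += bytes([ESC, ESC_ESC])   # 219 -> 219 221
        else:
            out.append(b)
    out.append(END)                 # trailing END marks the end of the datagram
    return bytes(out)

def slip_decode(frame: bytes) -> bytes:
    """Recover the datagram from a single SLIP frame."""
    out = bytearray()
    escaped = False
    for b in frame:
        if escaped:
            if b == ESC_END:
                out.append(END)
            elif b == ESC_ESC:
                out.append(ESC)
            else:
                raise ValueError("invalid escape sequence")
            escaped = False
        elif b == ESC:
            escaped = True
        elif b != END:              # END bytes only delimit the frame
            out.append(b)
    return bytes(out)
```

For example, the datagram bytes `1, 192, 219, 2` are sent on the line as `192, 1, 219, 220, 219, 221, 2, 192`.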

Figure 9-1. Operation of the Serial Line Internet Protocol (SLIP) SLIP’s only function is to frame data from layer 3 (usually IP datagrams) by surrounding them with END characters and replacing special characters as needed.

Problems and Limitations of SLIP

- SLIP’s maximum supported datagram size is not standardized and depends on each implementation.

- SLIP doesn’t provide any way of detecting or correcting errors in transmissions.

- SLIP offers no way for the two devices to communicate control information that may be required to manage the link.

- Since SLIP includes no headers of its own, it is not possible to identify that SLIP is being used.

- Devices do need some way of learning each other’s IP addresses for routing at layer 3. SLIP provides no method for this.

- SLIP provides no compression features.

- SLIP lacks even basic security features, with no means for authenticating connections or encrypting data.

Point-to-Point Protocol (PPP) Overview and Fundamentals

Figure 9-2. PPP location in the TCP/IP architecture PPP is the interface between the IP and a physical link such as a serial line or dial-up networking connection. This corresponds to layer 2 in the OSI Reference Model.

PPP is a complete link layer protocol suite for devices using TCP/IP. It provides framing, encapsulation, authentication, quality monitoring, and other features that enable robust operation of TCP/IP over a variety of physical layer connections.

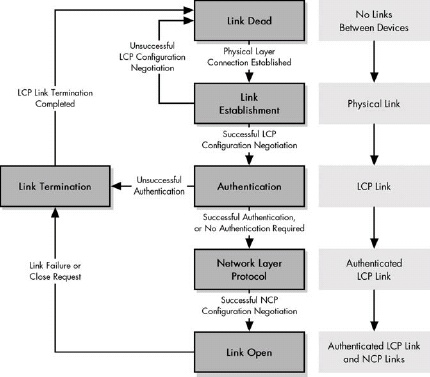

General Operation

- Link Setup and Configuration: Before the two devices can exchange information, they must make contact and set up a link between them. During link setup, the devices agree on all the parameters needed to manage the operation of the link. LCP begins this process and invokes the help of support protocols as needed, for options like authentication. After LCP has set up the link, the appropriate NCP is called for whatever layer 3 technology is being carried on the link, which completes link setup.

- Link Operation: The devices use the link to send datagrams. Each device transmits by encapsulating layer 3 datagrams and sending them down to layer 1 to be transmitted. Each device receives by taking PPP frames sent up from its own physical layer, stripping off the PPP header and passing the datagram up to layer 3. Where appropriate, optional protocols are used at this stage to offer features such as compression (CCP).

- Link Termination: When either device decides that it no longer wants to communicate, it terminates the link.
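The three basic steps can be modeled as a tiny state machine. This is only a sketch of the ordering constraint (a link must be set up before data is sent): the class name, the state names, and the placeholder `b"PPP"` header are assumptions, and no real LCP or NCP negotiation is performed.

```python
class PPPLinkSketch:
    """Tracks whether a link is usable; does not implement real PPP."""

    def __init__(self) -> None:
        self.state = "dead"
        self.frames: list[bytes] = []

    def set_up(self) -> None:
        """Link setup: LCP, optional authentication, and NCP would run here."""
        if self.state != "dead":
            raise RuntimeError("link is already set up")
        self.state = "open"

    def send(self, datagram: bytes) -> None:
        """Link operation: encapsulate a layer 3 datagram and queue it for layer 1."""
        if self.state != "open":
            raise RuntimeError("link is not open")
        self.frames.append(b"PPP" + datagram)  # placeholder, not the real frame format

    def terminate(self) -> None:
        """Link termination: either device may close the link."""
        self.state = "dead"
```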

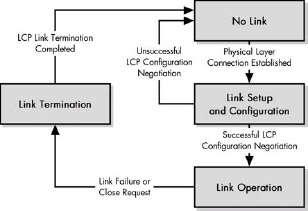

Figure 9-3. Overview of PPP operation In simplest terms, PPP consists of only three basic steps: link setup, link operation, and link termination.

PPP Link Setup and Phases

Figure 9-4. PPP phases A PPP connection follows a mainly linear sequence of transitions from the Link Dead Phase through the Link Open Phase.

Chapter 13. ADDRESS RESOLUTION AND THE TCP/IP ADDRESS RESOLUTION PROTOCOL (ARP)

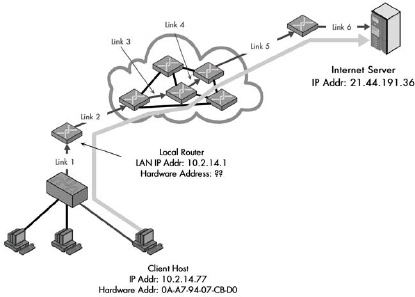

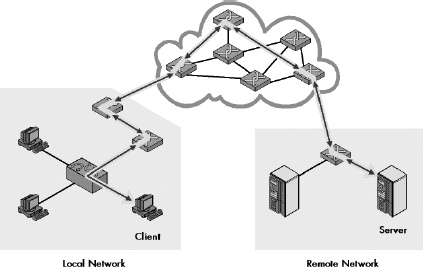

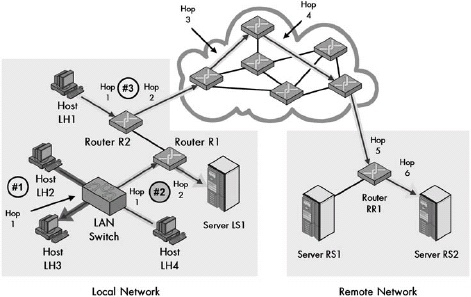

Figure 13-1. Why address resolution is necessary Even though conceptually the client and server are directly connected at layer 3, in reality, information passing between them goes over multiple layer 2 links. In this example, a client on the local network is accessing a server somewhere on the Internet. Logically, this connection can be made directly between the client and server, but in reality, it is a sequence of physical links at layer 2. In this case, there are six such links, most of them between routers that lie between the client and server. At each step, the decision of where to send the data is made based on a layer 3 address, but the actual transmission must be performed using the layer 2 address of the next intended recipient in the route.

Address resolution is required because internetworked devices communicate logically using layer 3 addresses, but the actual transmissions between devices take place using layer 2 (hardware) addresses.

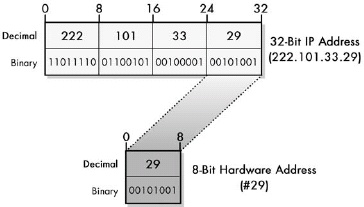

Address Resolution Through Direct Mapping

Figure 13-2. Address resolution through direct mapping With a small hardware address size, you can easily map each hardware address to a layer 3 address. As you can see in this figure, when the hardware address is small, it is easy to define a mapping that directly corresponds to a portion of a layer 3 address. In this example, an 8-bit MAC address, such as the one used for ARCNet, is mapped to the last byte of the device’s IP address, thereby making address resolution a trivial matter.

When the layer 2 address is smaller than the layer 3 address, it is possible to define a direct mapping between them so that the hardware address can be determined directly from the network layer address. This makes address resolution extremely simple, but reduces flexibility in how addresses are assigned.
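A direct mapping of the kind shown in Figure 13-2 takes a single line of code. The sketch below assumes an 8-bit hardware address equal to the last byte of the IPv4 address; the function name and sample address are illustrative.

```python
import ipaddress

def direct_map(ip: str) -> int:
    """Return the 8-bit hardware address: the last byte of the IPv4 address."""
    return ipaddress.IPv4Address(ip).packed[-1]

hardware_address = direct_map("192.168.1.37")   # 37
```

The loss of flexibility is visible here: once the IP address is assigned, the hardware address is fixed by it, and vice versa.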

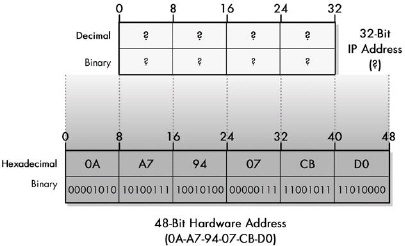

Figure 13-3. Address resolution problems with large hardware address size Direct mapping is impossible when the layer 2 address is larger in size than the layer 3 address.

Dynamic Address Resolution

Figure 13-4. Dynamic address resolution The device that wants to send data broadcasts a request asking for a response with a hardware address from the other device. Device A needs to send data to Device B, but knows only its IP address (IPB) and not its hardware address. Device A broadcasts a request asking to be sent the hardware address of the device using the IP address IPB. Device B responds back to Device A directly with the hardware address.

Dynamic address resolution is usually implemented using a special protocol. A device that knows only the network layer address of another device can use this protocol to request the other device’s hardware address.

TCP/IP Address Resolution Protocol (ARP)

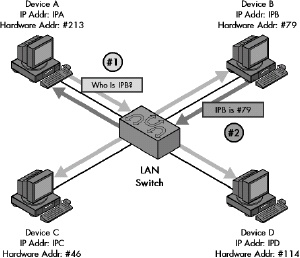

ARP was developed to facilitate dynamic address resolution between IP and Ethernet and can now be used on other layer 2 technologies as well. It works by allowing an IP device to send a broadcast on the local network, requesting that another device on the same local network respond with its hardware address.

ARP General Operation

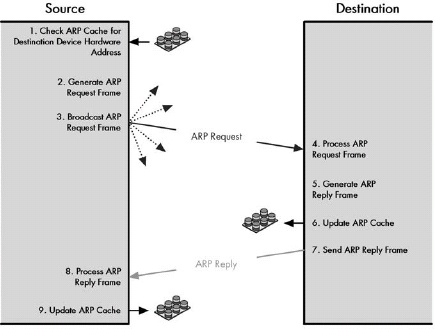

Figure 13-5. Address Resolution Protocol (ARP) transaction process ARP works by having the source device broadcast a request to find the destination, which responds using a reply message. ARP caches are also consulted and updated as needed.

ARP is a relatively simple request-and-reply protocol. The source device broadcasts an ARP Request that’s looking for a particular device based on the device’s IP address. That device responds with its hardware address in an ARP Reply message.
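The request-and-reply exchange, including the cache lookups mentioned in Figure 13-5, can be simulated in memory. The `Host` class, the `resolve` function, and the sample addresses are all hypothetical; the broadcast is modeled as a loop over every other host on the LAN.

```python
from typing import Optional

class Host:
    def __init__(self, ip: str, mac: str) -> None:
        self.ip, self.mac = ip, mac
        self.arp_cache: dict[str, str] = {}

    def handle_request(self, sender_ip: str, sender_mac: str,
                       target_ip: str) -> Optional[str]:
        """Reply with our hardware address only if the request is for our IP."""
        if target_ip != self.ip:
            return None
        self.arp_cache[sender_ip] = sender_mac   # remember the requester too
        return self.mac

def resolve(source: Host, target_ip: str, lan: list[Host]) -> Optional[str]:
    """Resolve target_ip to a hardware address, consulting the cache first."""
    if target_ip in source.arp_cache:
        return source.arp_cache[target_ip]
    for host in lan:                             # the broadcast ARP Request
        if host is source:
            continue
        mac = host.handle_request(source.ip, source.mac, target_ip)
        if mac is not None:                      # the unicast ARP Reply
            source.arp_cache[target_ip] = mac
            return mac
    return None

a = Host("10.0.0.1", "aa:aa:aa:aa:aa:aa")
b = Host("10.0.0.2", "bb:bb:bb:bb:bb:bb")
resolved = resolve(a, "10.0.0.2", [a, b])
```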

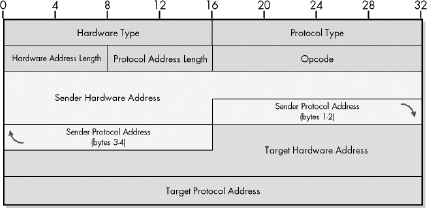

ARP Message

ARP Message Format

Figure 13-6. ARP message format

| Field Name | Size (Bytes) | Description |
|---|---|---|
| HRD | 2 | Hardware Type: This field specifies the type of hardware used for the local network transmitting the ARP message; thus, it also identifies the type of addressing used. Some of the most common values for this field are shown in Table 13-2. |
| PRO | 2 | Protocol Type: This field is the complement of the Hardware Type field, specifying the type of layer 3 addresses used in the message. For IPv4 addresses, this value is 2048 (0800 hex), which corresponds to the EtherType code for IP. |
| HLN | 1 | Hardware Address Length: Specifies how long hardware addresses are in this message. For Ethernet or other networks using IEEE 802 MAC addresses, the value is 6. |
| PLN | 1 | Protocol Address Length: Again, the complement of the preceding field; specifies how long protocol (layer 3) addresses are in this message. For IPv4 addresses, this value is 4. |
| OP | 2 | Opcode: This field specifies the nature of the ARP message being sent. The first two values (1 and 2) are used for regular ARP. Numerous other values are also defined to support other protocols that use the ARP frame format, such as RARP, as shown in Table 13-3. Some protocols are more widely used than others. |
| SHA | Variable, equals value in HLN field | Sender Hardware Address: The hardware (layer 2) address of the device sending this message, which is the IP datagram source device on a request, and the IP datagram destination on a reply. |
| SPA | Variable, equals value in PLN field | Sender Protocol Address: The IP address of the device sending this message. |
| THA | Variable, equals value in HLN field | Target Hardware Address: The hardware (layer 2) address of the device this message is being sent to. This is the IP datagram destination device on a request, and the IP datagram source on a reply. |
| TPA | Variable, equals value in PLN field | Target Protocol Address: The IP address of the device this message is being sent to. |
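To make the field layout concrete, here is a minimal sketch that packs and parses an ARP message for IPv4 over Ethernet using Python's standard `struct` module. The function names `build_arp_request` and `parse_arp` and the sample addresses are illustrative assumptions, and filling the Target Hardware Address with zeros in a request is a common convention rather than something stated in the table.

```python
import socket
import struct

FIXED_HEADER = "!HHBBH"  # HRD, PRO, HLN, PLN, OP in network byte order (8 bytes)


def build_arp_request(sender_mac: bytes, sender_ip: str, target_ip: str) -> bytes:
    """Pack an ARP Request for IPv4 addresses on Ethernet."""
    header = struct.pack(
        FIXED_HEADER,
        1,       # HRD: Ethernet
        0x0800,  # PRO: IPv4 (2048 decimal)
        6,       # HLN: MAC addresses are 6 bytes
        4,       # PLN: IPv4 addresses are 4 bytes
        1,       # OP: ARP Request
    )
    return (
        header
        + sender_mac                    # SHA
        + socket.inet_aton(sender_ip)   # SPA
        + b"\x00" * 6                   # THA: not yet known (assumed zero-filled)
        + socket.inet_aton(target_ip)   # TPA
    )


def parse_arp(message: bytes) -> dict:
    """Split an ARP message into its fields, honoring the HLN and PLN lengths."""
    hrd, pro, hln, pln, op = struct.unpack(FIXED_HEADER, message[:8])
    fields = {"HRD": hrd, "PRO": pro, "HLN": hln, "PLN": pln, "OP": op}
    offset = 8
    for name, size in (("SHA", hln), ("SPA", pln), ("THA", hln), ("TPA", pln)):
        fields[name] = message[offset:offset + size]
        offset += size
    return fields


request = build_arp_request(bytes.fromhex("0a0b0c0d0e0f"), "192.168.1.10", "192.168.1.20")
print(len(request))                 # 8-byte fixed part + 6 + 4 + 6 + 4 = 28
print(parse_arp(request)["OP"])     # 1 (ARP Request)
```

Because the address fields are sized by HLN and PLN, the same parser would handle other hardware or protocol address lengths without changes.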

ARP Hardware Type (HRD) Field Values

| Hardware Type (HRD) Value | Hardware Type |
|:---|:---|
| 1 | Ethernet (10 Mb) |
| 6 | IEEE 802 Networks |
| 7 | ARCNET |
| 15 | Frame Relay |
| 16 | Asynchronous Transfer Mode (ATM) |
| 17 | HDLC |
| 18 | Fibre Channel |
| 19 | Asynchronous Transfer Mode (ATM) |
| 20 | Serial Line |

ARP Opcode (OP) Field Values

| Opcode | ARP Message Type |
|:---|:---|
| 1 | ARP Request |
| 2 | ARP Reply |
| 3 | RARP Request |
| 4 | RARP Reply |
| 5 | DRARP Request |
| 6 | DRARP Reply |
| 7 | DRARP Error |
| 8 | InARP Request |
| 9 | InARP Reply |

Proxy ARP

Since ARP relies on broadcasts for address resolution, and broadcasts are not propagated beyond a physical network, ARP cannot function between devices on different physical networks. When such operation is required, a device, such as a router, can be configured as an ARP proxy to respond to ARP requests on behalf of a device on a different network.

Figure 13-7. ARP Proxy operation These two examples show how a router acting as an ARP proxy returns its own hardware address in response to requests by one device for an address on the other network. In this small internetwork shown, a single router connects two LANs that are on the same IP network or subnet. The router will not pass ARP broadcasts, but has been configured to act as an ARP proxy. In this example, Device A and Device D are each trying to send an IP datagram to the other, and so each broadcasts an ARP Request. The router responds to the request sent by Device A as if it were Device D, giving to Device A its own hardware address (without propagating Device A’s broadcast). It will forward the message sent by Device A to Device D on Device D’s network. Similarly, it responds to Device D as if it were Device A, giving its own address, then forwarding what Device D sends to it over to the network where Device A is located.

TCP/IP Address Resolution for IP Multicast Addresses

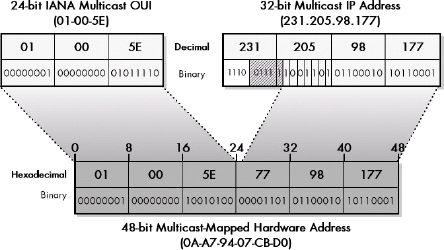

IP multicast addresses are resolved to IEEE 802 (Ethernet) MAC addresses using a direct mapping technique that uses 23 of the 28 bits in the IP multicast group address.

Figure 13-8. Mapping of multicast IP Addresses to IEEE 802 multicast MAC addresses Multicast IP addresses are mapped to IEEE 802 multicast MAC addresses by copying the IANA multicast OUI value (01-00-5E) to the top 24 bits, setting the 25th bit to zero, and copying the bottom 23 bits of the multicast address to the remaining 23 bits. To create a 48-bit multicast IEEE 802 (Ethernet) address, the top 24 bits are filled in with the IANA’s multicast OUI, 01-00-5E. The 25th bit is zero, and the bottom 23 bits of the multicast group are put into the bottom 23 bits of the MAC address. This leaves 5 bits (shown hatched) that are not mapped to the MAC address, meaning that 32 different IP addresses may have the same mapped multicast MAC address.
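The direct mapping can be written in a few lines. The sketch below uses the standard `ipaddress` module; the function name `multicast_ip_to_mac` is an illustrative choice, not part of any protocol API.

```python
import ipaddress

IANA_MULTICAST_OUI = 0x01005E  # top 24 bits of every mapped MAC address


def multicast_ip_to_mac(group: str) -> str:
    """Map an IPv4 multicast group address to its IEEE 802 multicast MAC address."""
    addr = ipaddress.IPv4Address(group)
    if not addr.is_multicast:
        raise ValueError(f"{group} is not a multicast address")
    low23 = int(addr) & 0x7FFFFF  # keep only the bottom 23 bits of the group address
    # The 25th bit stays zero because low23 never reaches bit position 23.
    mac = (IANA_MULTICAST_OUI << 24) | low23
    return "-".join(f"{(mac >> shift) & 0xFF:02X}" for shift in range(40, -1, -8))


print(multicast_ip_to_mac("224.0.0.1"))    # 01-00-5E-00-00-01
print(multicast_ip_to_mac("224.128.0.1"))  # 01-00-5E-00-00-01 (same MAC, different group)
```

The second call shows the ambiguity described in the caption: the 5 unmapped bits differ between the two groups, so both map to the same MAC address and the receiving IP layer has to filter out unwanted groups.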

TCP/IP Address Resolution for IP Version 6

Address resolution in IPv6 uses the new Neighbor Discovery (ND) Protocol instead of the Address Resolution Protocol (ARP). A device trying to send an IPv6 datagram sends a Neighbor Solicitation message to get the address of another device, which responds with a Neighbor Advertisement. When possible, to improve efficiency, the request is sent using a special type of multicast address rather than broadcast.

Chapter 14. REVERSE ADDRESS RESOLUTION AND THE TCP/IP REVERSE ADDRESS RESOLUTION PROTOCOL (RARP)

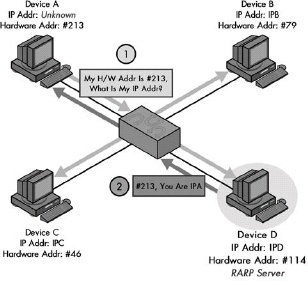

The Reverse Address Resolution Protocol (RARP) is the earliest and simplest protocol that’s designed to allow a device to obtain an IP address for use on a TCP/IP network. It is based directly on ARP and works in basically the same way, but in reverse: A device sends a request containing its hardware address, and a device set up as an RARP server responds back with the device’s assigned IP address.

Figure 14-1. Operation of the Reverse Address Resolution Protocol (RARP) RARP works like ARP but in reverse; a device broadcasts its hardware address and an RARP server responds with its IP address. Here, instead of Device A providing the IP address of another device and asking for its hardware address, it is providing its own hardware address and asking for an IP address it can use. The answer, in this case, is provided by Device D, which is serving as an RARP server for this network.

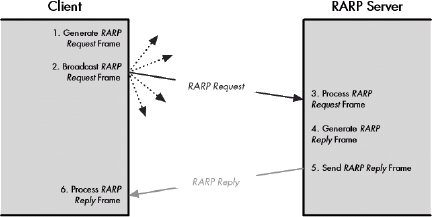

RARP General Operation

Figure 14-2. Reverse Address Resolution Protocol (RARP) operation RARP consists of the exchange of one broadcast request message and one unicast reply message.

The importance of host configuration has increased dramatically since the early 1980s. Many organizations assign IP addresses dynamically even for hosts that have disk storage, because of the many advantages this provides in administration and because of the efficient use of address space. For this reason, RARP has been replaced by two more capable technologies that operate at higher layers in the TCP/IP protocol stack: BOOTP and DHCP.

Chapter 15. INTERNET PROTOCOL VERSIONS, CONCEPTS, AND OVERVIEW

IP Overview and Key Operational Characteristics

Figure 15-1. The main function of IP: internetwork datagram delivery IP’s overall responsibility is to deliver data between devices on unconnected networks. This figure shows how IP delivers datagrams from one device to another over an internetwork; in this case, a distant client and server communicate with each other by passing IP datagrams over a series of interconnected networks.

While the Internet Protocol has many functions and characteristics, it can be boiled down to one primary purpose: the delivery of datagrams across an internetwork of connected networks.

IP Versions and Version Numbers

Version 4 of the Internet Protocol (IP) is actually the first version that was widely deployed and is currently the one in widespread use.

Chapter 16. IPV4 ADDRESSING CONCEPTS AND ISSUES

IP Addressing Overview and Fundamentals

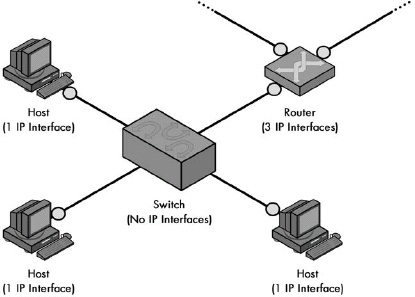

Figure 16-1. IP interfaces for common network devices Regular hosts have one interface; routers usually have more than one; and switches have none (because they operate at layer 2).

IP addresses serve the dual function of device identification and routing. Each network interface requires one IP address, which is network specific. IP addresses can be either statically or dynamically allocated, and come in unicast, multicast, and broadcast forms.

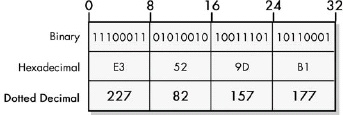

IP Address Size, Address Space, and Notation

IP addresses are 32-bit binary numbers, which can be expressed in binary, hexadecimal, or decimal form. Most commonly, they are expressed by dividing the 32 bits into four bytes and converting each to decimal, then separating these numbers with dots to create dotted decimal notation.
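As a rough illustration of the three notations, the following sketch converts between a 32-bit integer and dotted decimal by hand. The helper names and the sample address 154.71.150.42 are arbitrary choices made for this example.

```python
def from_dotted_decimal(text: str) -> int:
    """Convert dotted decimal notation to the underlying 32-bit number."""
    octets = [int(part) for part in text.split(".")]
    if len(octets) != 4 or any(not 0 <= octet <= 255 for octet in octets):
        raise ValueError(f"not a valid IPv4 address: {text}")
    value = 0
    for octet in octets:
        value = (value << 8) | octet  # each octet contributes 8 bits
    return value


def to_dotted_decimal(value: int) -> str:
    """Convert a 32-bit number to dotted decimal notation."""
    return ".".join(str((value >> shift) & 0xFF) for shift in (24, 16, 8, 0))


value = from_dotted_decimal("154.71.150.42")
print(format(value, "032b"))     # 10011010010001111001011000101010
print(format(value, "08X"))      # 9A47962A
print(to_dotted_decimal(value))  # 154.71.150.42
```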

Figure 16-2. IP address binary, hexadecimal, and dotted decimal representations The binary, hexadecimal, and decimal representations of an IP address are all equivalent.

Since IP addresses are 32 bits long, the total address space of IPv4 is 2^32 or 4,294,967,296 addresses. However, not all of these addresses can be used, for a variety of reasons.

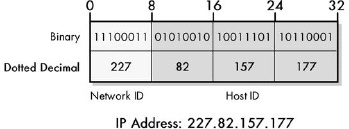

IP Basic Address Structure and Main Components

Figure 16-3. Basic IP address division: network ID and host ID This diagram shows one of the many ways to divide an IP address into a network ID and host ID.

Figure 16-4. Mid-octet IP address division IP addresses need not be divided between network ID and host ID on octet boundaries. The division here is into a 20-bit network ID and a 12-bit host ID.

The basic structure of an IP address consists of two components: the network ID and host ID. The dividing point of the 32-bit address is not fixed, but depends on a number of factors and can occur in a variety of places, including in the middle of a dotted-decimal octet.
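A short sketch of the split, assuming the dividing point is supplied by the caller as a count of network ID bits. The function name `split_address` and the sample address are hypothetical; the 20/12 split mirrors the mid-octet division in Figure 16-4.

```python
import ipaddress


def split_address(address: str, network_bits: int) -> tuple:
    """Split an IPv4 address into (network ID, host ID) at the given bit boundary."""
    if not 0 <= network_bits <= 32:
        raise ValueError("network_bits must be between 0 and 32")
    value = int(ipaddress.IPv4Address(address))
    host_bits = 32 - network_bits
    network_id = value >> host_bits            # the leading network_bits bits
    host_id = value & ((1 << host_bits) - 1)   # the trailing host_bits bits
    return network_id, host_id


network_id, host_id = split_address("154.71.150.42", 20)
print(format(network_id, "020b"))  # 20-bit network ID
print(format(host_id, "012b"))     # 12-bit host ID
```

Note that the split at 20 bits falls in the middle of the third octet, so neither part corresponds to a whole number of dotted-decimal octets.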

IP Addressing Categories and IP Address Adjuncts

An essential factor in determining how an IP address is interpreted is the addressing scheme in which it is used. The three schemes, listed from oldest to newest and in increasing order of complexity and flexibility, are classful addressing, subnetted classful addressing, and classless addressing.

Number of IP Addresses and Multihoming

A host with more than one IP network interface is said to be multihomed. A multihomed device can have multiple connections to the same network, to different networks, or both. A host connected to two networks can be configured to function as a router.

IP Address Management and Assignment Methods and Authorities

Figure 16-5. Multihomed devices on an IP internetwork This internetwork consists of two LANs, A (above) and B (below). LAN A has a multihomed workstation, shown with two IP network interface “circles.” The two LANs are connected together through a multihomed, shared server that has been configured to route traffic between them. Note that this server also handles all traffic passing between LAN B and the Internet (since the Internet connection is in LAN A only).

Chapter 17. CLASSFUL (CONVENTIONAL) ADDRESSING

IP Classful Addressing Overview and Address Classes

IP Address Classes and Class Characteristics and Uses

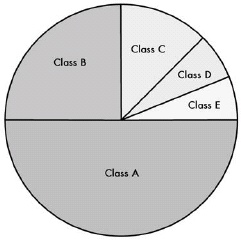

| IP Address Class | Fraction of Total IP Address Space | Number of Network ID Bits | Number of Host ID Bits | Intended Use |
|:---|:---|:---|:---|:---|
| Class A | 1/2 | 8 | 24 | Unicast addressing for very large organizations with hundreds of thousands or millions of hosts to connect to the Internet |
| Class B | 1/4 | 16 | 16 | Unicast addressing for medium to large organizations with many hundreds to thousands of hosts to connect to the Internet |
| Class C | 1/8 | 24 | 8 | Unicast addressing for smaller organizations with no more than about 250 hosts to connect to the Internet |
| Class D | 1/16 | n/a | n/a | IP multicasting |
| Class E | 1/16 | n/a | n/a | Reserved for experimental use |

Figure 17-1. Division of IPv4 address space into classes

The classful IP addressing scheme divides the IP address space into five classes, A through E, of differing sizes. Classes A, B, and C are the most important ones, designated for conventional unicast addresses and taking up seven-eighths of the address space. Class D is reserved for IP multicasting, and Class E is reserved for experimental use.

IP Classful Addressing Network and Host Identification and Address Ranges

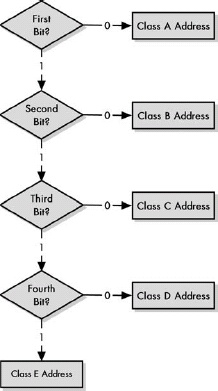

Figure 17-2. Class determination algorithm for classful IP addresses The simplicity of the classful IP addressing can be seen in the very uncomplicated algorithm used to determine the class of an address.

IP Address Class Bit Patterns, First-Octet Ranges, and Address Ranges

| IP Address Class | First Octet of IP Address | Lowest Value of First Octet (Binary) | Highest Value of First Octet (Binary) | Range of First Octet Values (Decimal) | Octets in Network ID/Host ID | Theoretical IP Address Range |
|:---|:---|:---|:---|:---|:---|:---|
| Class A | 0xxx xxxx | 0000 0001 | 0111 1110 | 1 to 126 | 1 / 3 | 1.0.0.0 to 126.255.255.255 |
| Class B | 10xx xxxx | 1000 0000 | 1011 1111 | 128 to 191 | 2 / 2 | 128.0.0.0 to 191.255.255.255 |
| Class C | 110x xxxx | 1100 0000 | 1101 1111 | 192 to 223 | 3 / 1 | 192.0.0.0 to 223.255.255.255 |
| Class D | 1110 xxxx | 1110 0000 | 1110 1111 | 224 to 239 | n/a | 224.0.0.0 to 239.255.255.255 |
| Class E | 1111 xxxx | 1111 0000 | 1111 1111 | 240 to 255 | n/a | 240.0.0.0 to 255.255.255.255 |

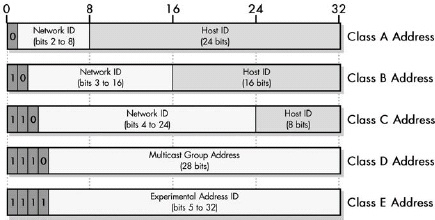

Figure 17-3. IP address class bit assignments and network/host ID sizes This illustration shows how the 32 bits of IP address are assigned for each of the five IP address classes. Classes A, B, and C are the normal classes used for regular unicast addresses; each has a different dividing point between the network ID and host ID. Classes D and E are special and are not divided in this manner.

In the classful IP addressing scheme, the class of an IP address is identified by looking at the first one, two, three, or four bits of the address. This can be done both by humans working with these addresses and routers making routing decisions. The use of these bit patterns means that IP addresses in different classes fall into particular address ranges that allow an address’s class to be determined by looking at the first byte of its dotted decimal address.
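The bit-pattern test can be sketched as a chain of checks on the first octet, examining one more leading bit at each step. The function name `address_class` is an illustrative choice.

```python
def address_class(address: str) -> str:
    """Return the classful address class (A to E) from the leading bits of the first octet."""
    first_octet = int(address.split(".")[0])
    if not 0 <= first_octet <= 255:
        raise ValueError(f"invalid first octet in {address}")
    if (first_octet & 0b10000000) == 0:
        return "A"  # 0xxx xxxx
    if (first_octet & 0b01000000) == 0:
        return "B"  # 10xx xxxx
    if (first_octet & 0b00100000) == 0:
        return "C"  # 110x xxxx
    if (first_octet & 0b00010000) == 0:
        return "D"  # 1110 xxxx
    return "E"      # 1111 xxxx


for sample in ("77.91.215.5", "154.3.99.6", "192.0.2.1", "224.0.0.5", "240.0.0.1"):
    print(sample, address_class(sample))
```

Each check only runs if all earlier bits were one, so at most four bits are ever examined, which is what makes the scheme cheap for routers to evaluate.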

IP Address Class A, B, and C Network and Host Capacities

IP Address Class Network and Host Capacities

| IP Address Class | Total # of Bits for Network ID/Host ID | First Octet of IP Address | # of Network ID Bits Used to Identify Class | Usable # of Network ID Bits | Number of Possible Network IDs | # of Host IDs per Network ID |
|:---|:---|:---|:---|:---|:---|:---|
| Class A | 8/24 | 0xxx xxxx | 1 | 8 - 1 = 7 | 2^7 - 2 = 126 | 2^24 - 2 = 16,777,214 |
| Class B | 16/16 | 10xx xxxx | 2 | 16 - 2 = 14 | 2^14 = 16,384 | 2^16 - 2 = 65,534 |
| Class C | 24/8 | 110x xxxx | 3 | 24 - 3 = 21 | 2^21 = 2,097,152 | 2^8 - 2 = 254 |

In the classful IP addressing scheme, a Class A network contains addresses for about 16 million network interfaces; a Class B network contains about 65,000; and a Class C network contains 254.
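These capacities follow directly from the bit counts, as the sketch below shows. The `CLASS_LAYOUT` table and the `capacities` function are names made up for this example. The subtraction of two network IDs for Class A reflects the first-octet range of 1 to 126 given earlier (first octets 0 and 127 are excluded); the subtraction of two host IDs reflects the reserved all-zeros and all-ones patterns.

```python
# class -> (total network ID bits, bits used to identify the class)
CLASS_LAYOUT = {"A": (8, 1), "B": (16, 2), "C": (24, 3)}


def capacities(address_class: str) -> tuple:
    """Return (possible network IDs, host IDs per network) for Class A, B, or C."""
    network_bits, class_bits = CLASS_LAYOUT[address_class]
    networks = 2 ** (network_bits - class_bits)
    if address_class == "A":
        networks -= 2  # first octets 0 and 127 are not available as Class A networks
    hosts = 2 ** (32 - network_bits) - 2  # all-zeros and all-ones host IDs are reserved
    return networks, hosts


for name in ("A", "B", "C"):
    networks, hosts = capacities(name)
    print(f"Class {name}: {networks:,} networks, {hosts:,} hosts each")
```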

IP Addresses with Special Meanings

When the network ID or host ID of an IP address is replaced by a pattern of all ones or all zeros, the result is an address with a special meaning. Examples of such addresses include “all hosts” broadcast addresses and addresses that refer to a specific host or a whole network.

IP Address Patterns with Special Meanings

| Network ID | Host ID | Class A Example | Class B Example | Class C Example | Special Meaning and Description |
|:---|:---|:---|:---|:---|:---|
| Network ID | Host ID | 77.91.215.5 | 154.3.99.6 | 207.82.157.160 | Normal Meaning: Refers to a specific device. |
| Network ID | All Zeros | 77.0.0.0 | 154.3.0.0 | 207.82.157.0 | The Specified Network: This notation, with a 0 at the end of the address, refers to an entire network. |
| All Zeros | Host ID | 0.91.215.5 | 0.0.99.6 | 0.0.0.160 | Specified Host on This Network: This addresses a host on the current or default network when the network ID is not known or when it doesn’t need to be explicitly stated. |
| All Zeros | All Zeros | 0.0.0.0 | | | Me: Used by a device to refer to itself when it doesn’t know its own IP address. (Alternatively, “this host,” or “the current/default host.”) The most common use is when a device attempts to determine its address using a host-configuration protocol like DHCP. May also be used to indicate that any address of a multihomed host may be used. |
| Network ID | All Ones | 77.255.255.255 | 154.3.255.255 | 207.82.157.255 | All Hosts on the Specified Network: Used for broadcasting to all hosts on the local network. |
| All Ones | All Ones | 255.255.255.255 | | | All Hosts on the Network: Specifies a global broadcast to all hosts on the directly connected network. Note that there is no address that would imply sending to all hosts everywhere on the global Internet, since this would be very inefficient and costly. |

The missing combination from this table is that of the network ID being all ones and the host ID normal. Semantically, this would refer to “all hosts of a specific ID on all networks,” which doesn’t really mean anything useful in practice, so it’s not used. Note also that, in theory, a special address where the network ID is all zeros and the host ID is all ones would have the same meaning as the all-ones limited broadcast address. The latter is used instead, however, because it is more general, not requiring knowledge of where the division is between the network ID and the host ID.

IP Reserved, Private, and Loopback Addresses

Private address blocks were created to allow private IP internets to be created using addresses that were guaranteed not to conflict with public IP addresses. They are commonly used in internetworks that aren’t connected to the global Internet; devices using them can also access the global Internet by using NAT.

Loopback Addresses

Portions of the IP address space are set aside for reserved, private, and loopback addresses.
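As a hedged illustration, the check below tests an address against the three private blocks defined in RFC 1918 and the 127.0.0.0/8 loopback block. These ranges are not listed in the summary above and are supplied here as background; the `describe` function and its labels are hypothetical.

```python
import ipaddress

# Private blocks from RFC 1918 (background knowledge, not taken from the text above).
PRIVATE_BLOCKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]
LOOPBACK_BLOCK = ipaddress.ip_network("127.0.0.0/8")


def describe(address: str) -> str:
    """Label an IPv4 address as 'loopback', 'private', or 'other'."""
    addr = ipaddress.IPv4Address(address)
    if addr in LOOPBACK_BLOCK:
        return "loopback"
    if any(addr in block for block in PRIVATE_BLOCKS):
        return "private"
    return "other"


for sample in ("10.1.2.3", "172.31.255.254", "172.32.0.1", "127.0.0.1", "8.8.8.8"):
    print(sample, describe(sample))
```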

IP Multicast Addressing

IP Multicast Address Ranges and Uses

| Range Start Address | Range End Address | Description |
|:---|:---|:---|
| 224.0.0.0 | 224.0.0.255 | Reserved for special well-known multicast addresses |
| 224.0.1.0 | 238.255.255.255 | Globally scoped (Internetwide) multicast addresses |
| 239.0.0.0 | 239.255.255.255 | Administratively scoped (local) multicast addresses |

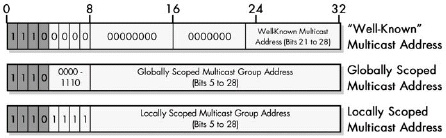

Figure 17-4. IP Multicast address ranges and uses All multicast addresses begin with 1110. The well-known group has zeros for the first 20 bits of the multicast group address, with 8 bits available to define 255 special multicast addresses. Multicast addresses starting with 1110 1111 are locally scoped; all other addresses are globally scoped (this includes addresses starting with 1110 0000 other than the 255 well-known addresses).
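The three ranges can be checked with simple comparisons. This sketch relies on the ordering that `ipaddress.IPv4Address` objects support; the function name `multicast_scope` and the returned labels are illustrative.

```python
import ipaddress

WELL_KNOWN_END = ipaddress.IPv4Address("224.0.0.255")
ADMIN_SCOPED_START = ipaddress.IPv4Address("239.0.0.0")


def multicast_scope(address: str) -> str:
    """Classify a multicast address by the range it falls into."""
    addr = ipaddress.IPv4Address(address)
    if not addr.is_multicast:
        raise ValueError(f"{address} is not a multicast address")
    if addr <= WELL_KNOWN_END:
        return "well-known"
    if addr >= ADMIN_SCOPED_START:
        return "administratively scoped"
    return "globally scoped"


for sample in ("224.0.0.5", "224.0.1.1", "238.255.255.255", "239.1.2.3"):
    print(sample, multicast_scope(sample))
```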

Well-Known Multicast Addresses

| Address | Description |
|:---|:---|
| 224.0.0.0 | Reserved; not used |
| 224.0.0.1 | All devices on the subnet |
| 224.0.0.2 | All routers on the subnet |
| 224.0.0.3 | Reserved |
| 224.0.0.4 | All routers using DVMRP |
| 224.0.0.5 | All routers using OSPF |
| 224.0.0.6 | Designated routers using OSPF |
| 224.0.0.9 | All routers using RIP-2 |
| 224.0.0.11 | Mobile agents (for Mobile IP) |
| 224.0.0.12 | DHCP server/relay agent |

Problems with Classful IP Addressing

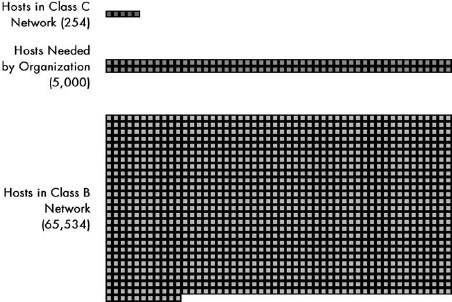

Figure 17-5. The main problem with classful addressing In this scale diagram, each square represents 50 available addresses. Since a Class C network has only 254 addresses, and a Class B network contains 65,534 addresses, an organization with 5,000 hosts is caught in the middle. It can only choose to either waste more than 90 percent of a Class B network or use 20 different Class C networks.
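The arithmetic behind this dilemma is easy to reproduce. The helper names below are invented for the example; the capacities 65,534 and 254 are the per-network host counts given for Class B and Class C.

```python
import math

CLASS_B_HOSTS = 65_534
CLASS_C_HOSTS = 254


def class_b_unused_fraction(hosts: int) -> float:
    """Fraction of a single Class B network left unused by the given number of hosts."""
    return 1 - hosts / CLASS_B_HOSTS


def class_c_networks_needed(hosts: int) -> int:
    """Number of Class C networks required to address the given number of hosts."""
    return math.ceil(hosts / CLASS_C_HOSTS)


hosts = 5_000
print(f"{class_b_unused_fraction(hosts):.1%} of a Class B network would go unused")
print(f"{class_c_networks_needed(hosts)} Class C networks would be needed")
```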

Chapter 18. IP SUBNET ADDRESSING (SUBNETTING) CONCEPTS

Subnet addressing adds an additional hierarchical level to how IP addresses are interpreted by dividing an organization’s IP network into subnets. This allows each organization to structure its address space to match its internal physical networks, rather than being forced to treat them as a flat block. This solves a number of problems with the original classful addressing scheme, but requires changes to how addressing and routing work, as well as modifications to several TCP/IP protocols.

IP Subnetting: Three-Level Hierarchical IP Subnet Addressing

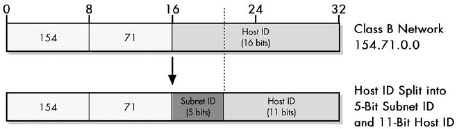

A classful network is subnetted by dividing its host ID portion, leaving some of the bits for the host ID while allocating others to a new subnet ID. These bits are then used to identify individual subnets within the network, into which hosts are assigned.

Figure 18-1. Subnetting a Class B network We begin with the Class B network 154.71.0.0, which has 16 bits in its host ID block. We then subnet this network by dividing the host ID into a subnet ID and host ID. In this case, 5 bits have been allocated to the subnet ID, leaving 11 bits for the host ID.

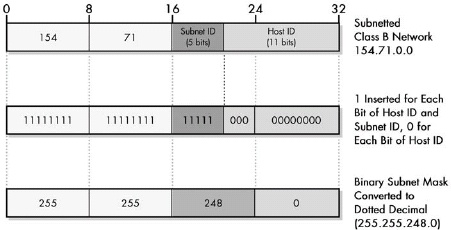

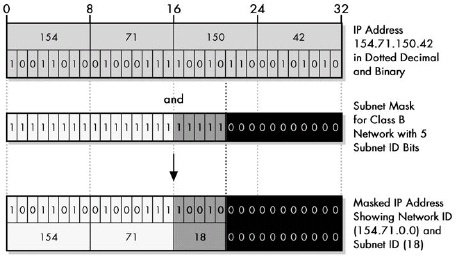

IP Subnet Masks, Notation, and Subnet Calculations

The subnet mask is a 32-bit binary number that accompanies an IP address. It is created so that it has a one bit for each corresponding bit of the IP address that is part of its network ID or subnet ID, and a zero for each bit of the IP address’s host ID. The mask thus tells TCP/IP devices which bits in that IP address belong to the network ID and subnet ID, and which are part of the host ID.

To use a subnet mask, a device performs a boolean AND operation between each bit of the subnet mask and each corresponding bit of an IP address. The resulting 32-bit number contains only the network ID and subnet ID of the address, with the host ID cleared to zero.
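The masking operation is a single bitwise AND on the 32-bit values. In this sketch the function name `apply_mask` and the host address 154.71.150.42 are illustrative; the mask 255.255.248.0 corresponds to a Class B network with 5 subnet ID bits.

```python
import ipaddress


def apply_mask(address: str, mask: str) -> str:
    """AND an IPv4 address with a subnet mask, clearing the host ID bits."""
    address_value = int(ipaddress.IPv4Address(address))
    mask_value = int(ipaddress.IPv4Address(mask))
    return str(ipaddress.IPv4Address(address_value & mask_value))


# Third octet: 150 = 1001 0110, mask 248 = 1111 1000, result 1001 0000 = 144.
print(apply_mask("154.71.150.42", "255.255.248.0"))  # 154.71.144.0
```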

Subnet Mask Notation

Figure 18-2. Determining the subnet mask of a subnetted network The Class B network from Figure 18-1 is shown at the top, with 5 bits assigned to the subnet ID and 11 bits left for the host ID. To create the subnet mask, you fill in a 32-bit number with 1 for each network ID and subnet ID bit, and 0 for each host ID bit. You can then convert this to dotted decimal.
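The construction described in this caption can be sketched as follows: write ones for the network ID and subnet ID bits, zeros for the rest, then convert to dotted decimal. The function name `subnet_mask` is an assumption made for the example.

```python
def subnet_mask(network_bits: int, subnet_bits: int) -> str:
    """Build a dotted-decimal subnet mask from the network ID and subnet ID bit counts."""
    ones = network_bits + subnet_bits
    if not 0 <= ones <= 32:
        raise ValueError("network and subnet bits must total between 0 and 32")
    # Shift the ones into the high-order positions and trim to 32 bits.
    value = (0xFFFFFFFF << (32 - ones)) & 0xFFFFFFFF
    return ".".join(str((value >> shift) & 0xFF) for shift in (24, 16, 8, 0))


# Class B network (16 network ID bits) with 5 subnet ID bits, as in Figure 18-1.
print(subnet_mask(16, 5))  # 255.255.248.0
```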

Applying the Subnet Mask: An Example

Figure 18-3. Determining the subnet ID of an IP address through subnet masking Subnet masking involves performing a boolean AND between each corresponding bit in the subnet mask and the IP address. The subnet mask can be likened to a physical mask; each 1 in it lets the corresponding bit of the IP address show through, while each 0 blocks the corresponding IP address bit. In this way the host ID bits of the address are stripped so the device can determine the subnet to which the address belongs.

The subnet mask is often expressed in dotted decimal notation for convenience, but is used by computers as a binary number and usually must be expressed in binary to understand how the mask works and the number of subnet ID bits it represents.

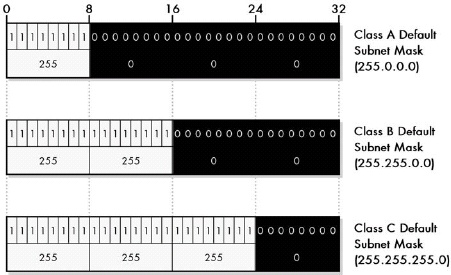

IP Default Subnet Masks for Address Classes A, B, and C

Figure 18-4. Default subnet masks for Class A, Class B, and Class C networks

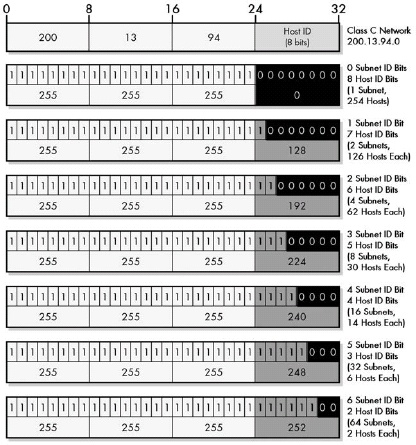

Each of the three IP unicast and broadcast address classes, A, B, and C, has a default subnet mask defined that has a one for each bit of the class’s network ID, a zero for each bit of its host ID, and no subnet ID bits. The three default subnet masks are 255.0.0.0 for Class A, 255.255.0.0 for Class B, and 255.255.255.0 for Class C.

IP Custom Subnet Masks

The fundamental trade-off in subnetting is that each addition of a bit to the subnet ID (and thus, subtraction of that bit from the host ID) doubles the number of subnets, and approximately halves the number of hosts in each subnet. Each subtraction of a bit from the subnet ID (and addition of that bit to the host ID) does the opposite.

Figure 18-5. Custom subnet masks for Class C networks Since there are 8 host ID bits in a Class C network address, there are six different ways that the network can be subnetted. Each corresponds to a different custom subnet mask, which is created by changing the allocated subnet ID bits from zero to one.

The number of hosts allowed in each subnet is two raised to the power of the number of host ID bits remaining after subnetting, less two. The reduction by two occurs because the all-zeros and all-ones host IDs within each subnet are reserved for two special meaning addresses: to refer to the subnetwork itself and to refer to its local broadcast address. In some implementations, the number of subnets is also reduced by two because the all-zeros and all-ones subnet IDs were originally not allowed to be used.
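The trade-off can be tabulated with a short loop. This sketch counts all subnet IDs (it does not apply the older restriction on all-zeros and all-ones subnet IDs) and stops when only two host ID bits remain, since a single host bit would leave no usable host addresses. The function name `subnet_tradeoff` is illustrative.

```python
def subnet_tradeoff(host_id_bits: int) -> list:
    """List (subnet ID bits, subnets, hosts per subnet) for each way to subnet a network."""
    rows = []
    for subnet_bits in range(1, host_id_bits - 1):  # leave at least 2 host ID bits
        remaining_host_bits = host_id_bits - subnet_bits
        subnets = 2 ** subnet_bits
        hosts_per_subnet = 2 ** remaining_host_bits - 2  # minus all-zeros and all-ones
        rows.append((subnet_bits, subnets, hosts_per_subnet))
    return rows


# A Class C network has 8 host ID bits to divide.
for subnet_bits, subnets, hosts in subnet_tradeoff(8):
    print(f"{subnet_bits} subnet bits: {subnets} subnets of {hosts} hosts")
```

For a Class C network this produces six rows, from 2 subnets of 126 hosts down to 64 subnets of 2 hosts.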

IP Subnet Identifiers, Subnet Addresses, and Host Addresses

The subnet identifier of a subnet is just its subnet ID. The subnet address of a subnet is determined by substituting its subnet ID into the subnet bits of the overall network address.
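A minimal sketch of that substitution, assuming the caller supplies the network address, the size of the original host ID field, and the number of bits allocated to the subnet ID. The function name `subnet_address` is hypothetical; the 154.71.0.0 network with 5 subnet ID bits follows the example in Figure 18-1.

```python
import ipaddress


def subnet_address(network: str, host_id_bits: int, subnet_bits: int, subnet_id: int) -> str:
    """Place subnet_id into the subnet bits of a network address."""
    if not 0 <= subnet_id < 2 ** subnet_bits:
        raise ValueError("subnet_id does not fit in the subnet ID field")
    base = int(ipaddress.IPv4Address(network))
    shift = host_id_bits - subnet_bits  # host ID bits that remain below the subnet ID
    return str(ipaddress.IPv4Address(base | (subnet_id << shift)))


# Class B network 154.71.0.0: 16 host ID bits, 5 of them used for the subnet ID.
print(subnet_address("154.71.0.0", 16, 5, 1))   # 154.71.8.0
print(subnet_address("154.71.0.0", 16, 5, 18))  # 154.71.144.0
```

With 11 host ID bits remaining, consecutive subnet addresses are 2,048 addresses apart, which is why the third octet advances in steps of 8.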

Chapter 21. INTERNET PROTOCOL DATAGRAM ENCAPSULATION AND FORMATTING

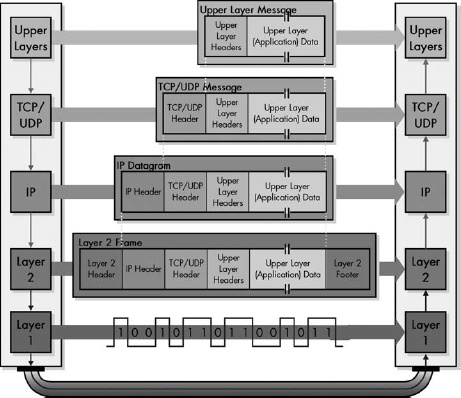

Figure 21-1. IP datagram encapsulation The upper-layer message is packaged into a TCP or UDP message, which becomes the payload of an IP datagram. The IP datagram is then passed down to layer 2, where it is encapsulated in a LAN, WAN, or WLAN frame. It is then converted to bits and transmitted at the physical layer.

IP Datagram General Format

Internet Protocol Version 4 (IPv4) Datagram Format

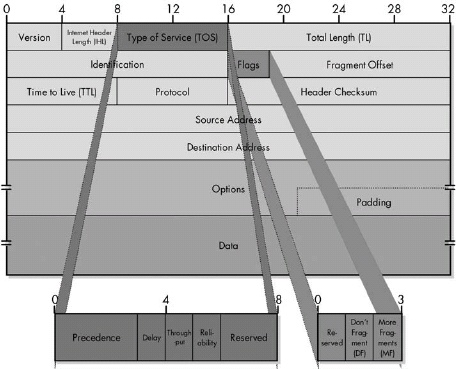

|Field Name |Size (Bytes) |Description |
|:--- |:--- |:--- |
|Version |1/2 (4 bits) |Identifies the version of IP used to generate the datagram. For IPv4, this is the number 4. This field ensures compatibility between devices that may be running different versions of IP. In general, a device running an older version of IP will reject datagrams created by newer implementations, under the assumption that the older version may not be able to interpret the newer datagram correctly. |
|IHL |1/2 (4 bits) |Specifies the length of the IP header, in 32-bit words. This includes the length of any options fields and padding. The normal value of this field when no options are used is 5 (5 32-bit words = 5 × 4 = 20 bytes). Contrast this with the longer Total Length field in this table. |
|TOS |1 |A field designed to carry information to provide quality-of-service features, such as prioritized delivery for IP datagrams. This has not been as widely used as originally defined, and its meaning has been redefined for use by a technique called Differentiated Services (DS), as discussed in the “IP Datagram Type of Service (TOS) Field” section of this chapter. |
|TL |2 |Specifies the total length of the IP datagram, in bytes. Since this field is 16 bits wide, the maximum length of an IP datagram is 65,535 bytes, though most are much smaller. |
|Identification |2 |This field contains a 16-bit value that is common to each of the fragments belonging to a particular message; for datagrams originally sent unfragmented, it is still filled in so it can be used if the datagram must be fragmented by a router during delivery. The recipient uses this field to reassemble messages without accidentally mixing fragments from different messages. This is needed because fragments may arrive from multiple messages mixed together, since IP datagrams can be received out of order from any device. (See the discussion of IP message fragmentation in Chapter 22.) |
|Flags |3/8 (3 bits) |Three control flags, two of which are used to manage fragmentation (as described in the topic on fragmentation), and one that is reserved. |
|Fragment Offset |1 5/8 (13 bits) |When fragmentation of a message occurs, this field specifies the offset, or position, in the message where the data in this fragment goes, in units of eight bytes (64 bits). The first fragment has an offset of 0. (See the discussion of fragmentation in Chapter 22 for a description of how the field is used.) |
|TTL |1 |This specifies how long the datagram is allowed to live on the network, in router hops. Each router decrements the value of the TTL field (reduces it by one) prior to transmitting it. If the TTL field drops to zero, the datagram is assumed to have taken too long a route and is discarded. (See the “IP Datagram Time to Live (TTL) Field” section later in this chapter for more information.) |
|Protocol |1 |Identifies the higher-layer protocol (generally either a transport layer protocol or encapsulated network layer protocol) carried in the datagram. |
|Header Checksum |2 |A checksum is computed over the header to provide basic protection against corruption in transmission. This is not the more complex cyclic redundancy check (CRC) code that’s typically used by data link layer technologies such as Ethernet; it’s just a 16-bit checksum. It is calculated by dividing the header bytes into words (a word is two bytes) and then adding them together. Only the header is checksummed, not the data. At each hop, the device receiving the datagram does the same checksum calculation, and if there is a mismatch, it discards the datagram as damaged. |
|Source Address |4 |This is the 32-bit IP address of the originator of the datagram. Note that even though intermediate devices such as routers may handle the datagram, they do not normally put their address into this field; the address is always that of the device that originally sent the datagram. |
|Destination Address |4 |This is the 32-bit IP address of the intended recipient of the datagram. Again, even though devices such as routers may be the intermediate targets of the datagram, this field is always used to specify the ultimate destination. |
|Options |Variable |One or more of several types of options may be included after the standard headers in certain IP datagrams, as discussed later in this chapter, in the “IP Datagram Options and Option Format” section. |
|Padding |Variable |If one or more options are included, and the number of bits used for them is not a multiple of 32, enough 0 bits are added to pad out the header to a multiple of 32 bits (four bytes). |
|Data |Variable |This is the data that will be transmitted in the datagram. It is either an entire higher-layer message or a fragment of one. |
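To make the field layout concrete, here is a minimal sketch that unpacks the 20-byte fixed header and verifies the Header Checksum. It assumes the standard one's-complement form of the checksum (the 16-bit words are summed with carries folded back in, and the result is complemented); the function names, sample addresses, and sample field values are illustrative only.

```python
import ipaddress
import struct

# Version/IHL, TOS, TL, Identification, Flags/Fragment Offset,
# TTL, Protocol, Header Checksum, Source Address, Destination Address
FIXED_HEADER = struct.Struct("!BBHHHBBH4s4s")  # 20 bytes, network byte order


def header_checksum(header: bytes) -> int:
    """One's-complement sum of the header's 16-bit words, complemented."""
    total = sum(word for (word,) in struct.iter_unpack("!H", header))
    while total >> 16:  # fold any carry back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def parse_ipv4_header(packet: bytes) -> dict:
    """Split the fixed header into its fields and check the checksum."""
    if len(packet) < FIXED_HEADER.size:
        raise ValueError("packet shorter than the 20-byte fixed header")
    (ver_ihl, tos, total_length, ident, flags_frag,
     ttl, protocol, checksum, src, dst) = FIXED_HEADER.unpack_from(packet)
    ihl = ver_ihl & 0x0F          # header length in 32-bit words
    header_len = ihl * 4
    if ihl < 5 or len(packet) < header_len:
        raise ValueError("invalid IHL")
    return {
        "version": ver_ihl >> 4,
        "ihl": ihl,
        "tos": tos,
        "total_length": total_length,
        "identification": ident,
        "flags": flags_frag >> 13,               # top 3 bits
        "fragment_offset": flags_frag & 0x1FFF,  # low 13 bits, 8-byte units
        "ttl": ttl,
        "protocol": protocol,
        "header_checksum": checksum,
        "source": str(ipaddress.IPv4Address(src)),
        "destination": str(ipaddress.IPv4Address(dst)),
        "options": packet[FIXED_HEADER.size:header_len],
        # Summing a valid header, checksum field included, yields zero.
        "checksum_ok": header_checksum(packet[:header_len]) == 0,
    }


def build_sample_header() -> bytes:
    """Build an option-free header with made-up values for demonstration."""
    src = ipaddress.IPv4Address("192.0.2.1").packed
    dst = ipaddress.IPv4Address("198.51.100.7").packed
    draft = FIXED_HEADER.pack(0x45, 0, 40, 0x1234, 0, 64, 6, 0, src, dst)
    checksum = header_checksum(draft)  # computed with the field set to zero
    return draft[:10] + struct.pack("!H", checksum) + draft[12:]


if __name__ == "__main__":
    print(parse_ipv4_header(build_sample_header()))
```

The sender computes the checksum with the checksum field set to zero. A receiver can then run the same sum over the header as received and expect zero, which is how `checksum_ok` is derived here.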

IPv4 Flags Subfields

|Value (Hexadecimal) |Value (Decimal) |Protocol |
|:--- |:--- |:--- |
|00 |0 |Reserved |
|01 |1 |ICMP |
|02 |2 |IGMP |
|03 |3 |GGP |
|04 |4 |IP-in-IP Encapsulation |
|06 |6 |TCP |
|08 |8 |EGP |
|11 |17 |UDP |
|32 |50 |Encapsulating Security Payload (ESP) Extension Header |
|33 |51 |Authentication Header (AH) Extension Header |

Figure 21-2. IPv4 datagram format This diagram shows the all-important IPv4 datagram format. The first 20 bytes are the fixed IP header, followed by an optional Options section, and a variable-length Data area. Note that the Type of Service field is shown as originally defined in the IPv4 standard.

Original Definition of IPv4 Type of Service (TOS) Field

|Subfield Name |Size (Bytes) |Description |
|:--- |:--- |:--- |
|Precedence |3/8 (3 bits) |A field indicating the priority of the datagram. There were eight defined values, from lowest to highest priority:<ul><li>000: Routine</li><li>001: Priority</li><li>010: Immediate</li><li>011: Flash</li><li>100: Flash Override</li><li>101: CRITIC/ECP</li><li>110: Internetwork Control</li><li>111: Network Control</li></ul> |
|D |1/8 (1 bit) |Set to 0 to request normal delay in delivery; set to 1 if a low delay delivery is requested. |
|T |1/8 (1 bit) |Set to 0 to request normal delivery throughput; set to 1 if higher throughput delivery is requested. |
|R |1/8 (1 bit) |Set to 0 to request normal reliability in delivery; set to 1 if higher reliability delivery is requested. |
|Reserved |2/8 (2 bits) |Not used. |
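The original TOS layout can be decoded with a few shifts and masks. This sketch assumes the subfields occupy the byte in the order listed in the table, starting from the most significant bit; the function and key names are hypothetical.

```python
PRECEDENCE_NAMES = [
    "Routine",               # 000
    "Priority",              # 001
    "Immediate",             # 010
    "Flash",                 # 011
    "Flash Override",        # 100
    "CRITIC/ECP",            # 101
    "Internetwork Control",  # 110
    "Network Control",       # 111
]


def decode_tos(tos: int) -> dict:
    """Split a TOS byte into Precedence and the D, T, and R request bits."""
    if not 0 <= tos <= 0xFF:
        raise ValueError("TOS is a single byte")
    return {
        "precedence": PRECEDENCE_NAMES[tos >> 5],  # top 3 bits
        "low_delay": bool(tos & 0x10),             # D bit
        "high_throughput": bool(tos & 0x08),       # T bit
        "high_reliability": bool(tos & 0x04),      # R bit
    }


if __name__ == "__main__":
    # Binary 101 1 0 0 00: CRITIC/ECP precedence with low delay requested.
    print(decode_tos(0b10110000))
```

The two low-order reserved bits are ignored here, matching the "Not used" entry in the table.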

IP Datagram Options and Option Format

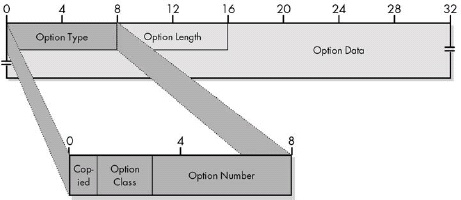

IPv4 Option Format

|Subfield Name |Size (Bytes) |Description |
|:--- |:--- |:--- |
|Option Type |1 |The Option Type subfield is divided into three sub-subfields. |
|Option Length |0 or 1 |For variable-length options, indicates the size of the entire option, including all three subfields shown here, in bytes. |
|Option Data |0 or variable |For variable-length options, contains data to be sent as part of the option. |

IPv4 Options: Option Type Subfields

|Sub-Subfield Name |Size (Bytes) |Description |
|:--- |:--- |:--- |
|Copied Flag |1/8 (1 bit) |This bit is set to 1 if the option is intended to be copied into all fragments when a datagram is fragmented; it is cleared to 0 if the option should not be copied into fragments. |
|Option Class |2/8 (2 bits) |Specifies one of four potential values that indicate the general category into which the option belongs. In fact, only two of the values are used: 0 is for Control options, and 2 for Debugging and Measurement. |
|Option Number |5/8 (5 bits) |Specifies the kind of option. 32 different values can be specified for each of the two option classes. Of these, a few are more commonly employed. |
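The three sub-subfields can be pulled out of the Option Type byte as follows. This is a small sketch that assumes the Copied Flag is the most significant bit, followed by the Option Class and then the Option Number; the names are illustrative.

```python
from typing import NamedTuple


class OptionType(NamedTuple):
    copied: int        # 1 if the option is copied into all fragments
    option_class: int  # 0 = Control, 2 = Debugging and Measurement
    number: int        # kind of option within its class


def decode_option_type(value: int) -> OptionType:
    """Split an Option Type byte into its 1-bit, 2-bit, and 5-bit parts."""
    if not 0 <= value <= 0xFF:
        raise ValueError("Option Type is a single byte")
    return OptionType(
        copied=value >> 7,
        option_class=(value >> 5) & 0x03,
        number=value & 0x1F,
    )


if __name__ == "__main__":
    print(decode_option_type(0x44))  # class 2, number 4
    print(decode_option_type(0x83))  # copied, class 0, number 3
```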

Figure 21-3. IPv4 Options field format This diagram shows the full field format for an IPv4 option. Note that a few simple options may consist of only the Option Type subfield, with the Option Length and Option Data subfields omitted.

Common IPv4 Options

|Option Class |Option Number |Length (Bytes) |Option Name |Description |
|:--- |:--- |:--- |:--- |:--- |
|0 |0 |1 |End of Options List |An option containing just a single zero byte, used to mark the end of a list of options. |
|0 |1 |1 |No Operation |A “dummy option” used as internal padding to align certain options on a 32-bit boundary when required. |
|0 |2 |11 |Security |An option provided for the military to indicate the security classification of IP datagrams. |
|0 |3 |Variable |Loose Source Route |One of two options for source routing of IP datagrams. |
|0 |7 |Variable |Record Route |Allows the route used by a datagram to be recorded within the header for the datagram itself. If a source device sends a datagram with this option in it, each router that handles the datagram adds its IP address to this option. The recipient can then extract the list of IP addresses to see the route taken by the datagram. Note that the length of this option is set by the originating device. It cannot be enlarged as the datagram is routed, and if it fills up before it arrives at its destination, only a partial route will be recorded. |
|0 |9 |Variable |Strict Source Route |One of two options for source routing of IP datagrams. |
|2 |4 |Variable |Timestamp |Works similarly to the Record Route option, but each device puts in a timestamp, so the recipient can see how long it took for the datagram to travel between routers. As with the Record Route option, the length of this option is set by the originating device and cannot be enlarged by intermediate devices. |
|2 |18 |12 |Traceroute |Used in the enhanced implementation of the traceroute utility, as described in RFC 1393. Also see Chapter 33, which discusses ICMP traceroute messages. |

Each IPv4 datagram has a 20-byte mandatory header and may also include one or more options. Each option has its own field format, and most are variable in size.
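Because most options carry their own length, a receiver can step through the Options area one option at a time. The sketch below is a simplified walk under these assumptions: End of Options List (type byte 0) and No Operation (type byte 1) are the only single-byte options, every other option has an Option Length byte covering the whole option, and the sample bytes are invented for illustration.

```python
def walk_options(options: bytes) -> list:
    """Return (option_type, option_data) pairs found in an Options area."""
    found = []
    i = 0
    while i < len(options):
        opt_type = options[i]
        if opt_type == 0:            # End of Options List: stop here
            found.append((opt_type, b""))
            break
        if opt_type == 1:            # No Operation: single byte of padding
            found.append((opt_type, b""))
            i += 1
            continue
        if i + 1 >= len(options):
            raise ValueError("option is missing its length byte")
        length = options[i + 1]      # counts type, length, and data bytes
        if length < 2 or i + length > len(options):
            raise ValueError("option length is out of range")
        found.append((opt_type, options[i + 2:i + length]))
        i += length
    return found


if __name__ == "__main__":
    # No Operation, a 7-byte Record Route option (type 7), End of Options
    # List, then zero padding out to a multiple of four bytes.
    sample = bytes([1, 7, 7, 4, 0, 0, 0, 0, 0, 0, 0, 0])
    for opt_type, data in walk_options(sample):
        print(opt_type, data.hex())
```

Anything after the End of Options List byte is treated as padding and ignored.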

Chapter 22. IP DATAGRAM SIZE, FRAGMENTATION, AND REASSEMBLY

IP Datagram Size, MTU, and Fragmentation Overview

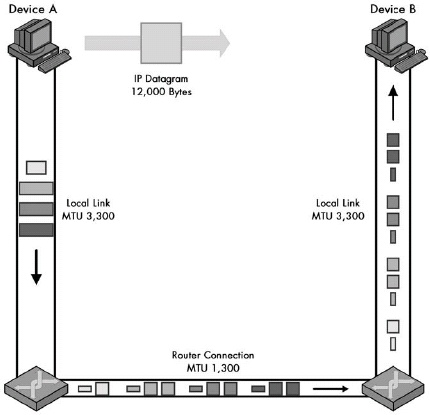

The size of the largest IP datagram that can be transmitted over a physical network is called that network’s maximum transmission unit (MTU). If a datagram is passed from a network with a high MTU to one with a low MTU, it must be fragmented to fit the other network’s smaller MTU.

Figure 22-1. IP maximum transmission unit (MTU) and fragmentation In this simple example, Device A is sending to Device B over a small internetwork consisting of one router and two physical links. The link from Device A to the router has an MTU of 3,300 bytes, but from the router to Device B, it is only 1,300 bytes. Thus, any IP datagrams larger than 1,300 bytes will need to be fragmented.

Figure 22-2. IPv4 datagram fragmentation. This example illustrates a two-step fragmentation of a large IP datagram. The boxes represent datagrams or datagram fragments and are shown to scale. The original datagram is 12,000 bytes, represented by the large, gray box. To transmit this data over the first local link, Device A splits it into four fragments, shown on the left. The first router must fragment each of these into smaller fragments to send them over the 1,300-byte MTU link, as shown on the bottom. Note that the second router does not reassemble the 1,300-byte fragments, even though its link to Device B has an MTU of 3,300 bytes. (The “IP Fragmentation Process” section later in this chapter describes the process by which the fragments in this example are created.)

IP Message Fragmentation Process

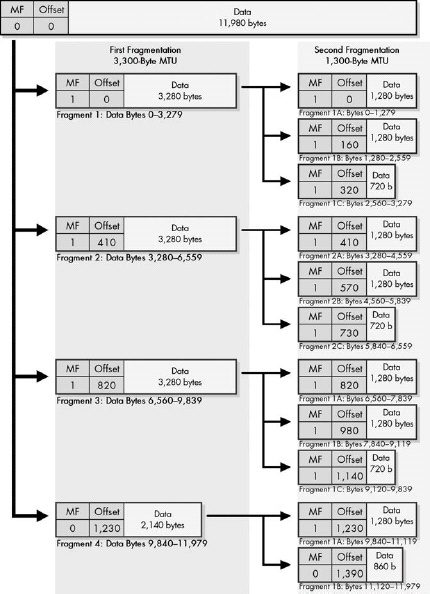

Figure 22-3. IPv4 datagram fragmentation process In this diagram, the MF and Fragment Offset fields of each fragment are shown for reference. The Data fields are shown to scale (the length of each is proportional to the number of bytes in the fragment).

When an MTU requirement forces a datagram to be fragmented, it is split into several smaller IP datagrams, each containing part of the original. The header of the original datagram is changed into the header of the first fragment, and new headers are created for the other fragments. Each is set to the same Identification value to mark them as part of the same original datagram. The Fragment Offset of each is set to the location where the fragment belongs in the original. The More Fragments field is set to 1 for all fragments but the last, to let the recipient know when it has received all the fragments.
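The bookkeeping for the Fragment Offset and More Fragments fields can be sketched as below. This is an illustration rather than a full implementation: it tracks only sizes and field values, assumes a 20-byte header with no options, and uses hypothetical parameter names. The `base_offset` and `more_after` parameters cover the case where a router splits something that is already a fragment.

```python
def fragment(data_len: int, mtu: int, header_len: int = 20,
             base_offset: int = 0, more_after: bool = False) -> list:
    """Return (fragment_offset, data_bytes, more_fragments) per fragment.

    fragment_offset is in units of eight bytes, as in the IP header.
    """
    # Every fragment but the last must carry a multiple of eight data bytes.
    max_data = (mtu - header_len) // 8 * 8
    if max_data <= 0:
        raise ValueError("MTU too small to carry any data")
    fragments = []
    pos = 0
    while pos < data_len:
        size = min(max_data, data_len - pos)
        is_last = pos + size == data_len
        # MF is 0 only on the final piece of the original datagram.
        mf = 0 if (is_last and not more_after) else 1
        fragments.append((base_offset + pos // 8, size, mf))
        pos += size
    return fragments


if __name__ == "__main__":
    # A 12,000-byte datagram carries 11,980 data bytes after its header.
    for frag in fragment(11_980, mtu=3_300):
        print(frag)
```

With a 3,300-byte MTU, the 12,000-byte datagram from Figure 22-2 yields four fragments, the last one with More Fragments set to 0. Calling `fragment(3_280, mtu=1_300, more_after=True)` then models a router splitting the first of those fragments again for the 1,300-byte link.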

IP Message Reassembly

Reassembly is complete when the entire buffer has been filled and the fragment with the More Fragments bit set to 0 is received, indicating that it is the last fragment of the datagram. The reassembled datagram is then processed in the same way as a normal, unfragmented datagram. On the other hand, if the timer for the reassembly expires with any of the fragments missing, the message cannot be reconstructed. The fragments are discarded, and an ICMP Time Exceeded message is generated. Since IP is unreliable, it relies on higher-layer protocols such as the Transmission Control Protocol (TCP) to determine that the message was not properly received and then retransmit it.
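The completion test can be sketched as follows. This is a deliberately simplified model: it assumes fragments do not overlap, takes all fragments of one datagram at once, and leaves out the reassembly timer and ICMP reporting. The tuple layout and function name are hypothetical.

```python
def reassemble(fragments):
    """Rebuild the data from (fragment_offset, more_fragments, data) tuples.

    Fragments may arrive in any order. Returns the data as bytes, or None
    if the last fragment has not arrived or a gap remains.
    """
    pieces = {}
    total_len = None
    for offset_units, mf, data in fragments:
        start = offset_units * 8  # Fragment Offset is in eight-byte units
        pieces[start] = data
        if mf == 0:               # last fragment fixes the total length
            total_len = start + len(data)
    if total_len is None:
        return None               # still waiting for the last fragment
    out = bytearray()
    pos = 0
    while pos < total_len:
        chunk = pieces.get(pos)
        if not chunk:
            return None           # a fragment is missing at this position
        out += chunk
        pos += len(chunk)
    return bytes(out) if pos == total_len else None


if __name__ == "__main__":
    payload = bytes(range(256)) * 10  # 2,560 bytes of sample data
    frags = [
        (250, 0, payload[2000:]),     # last fragment arrives first
        (0, 1, payload[:1000]),
        (125, 1, payload[1000:2000]),
    ]
    print(reassemble(frags) == payload)
    print(reassemble(frags[:2]))      # middle fragment missing
```

A real implementation would keep a separate buffer for each datagram being reassembled and discard it when the timer expires.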

Chapter 23. IP ROUTING AND MULTICASTING

IP Datagram Delivery

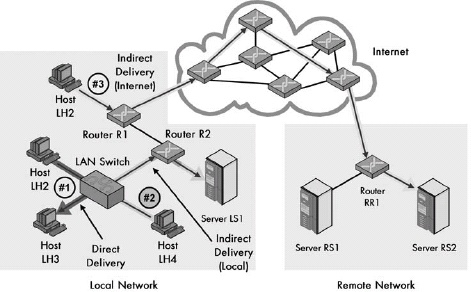

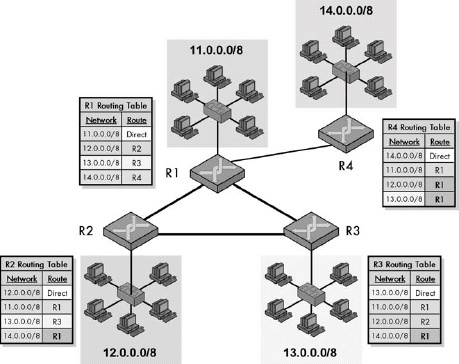

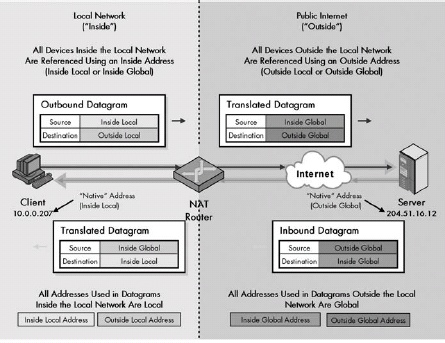

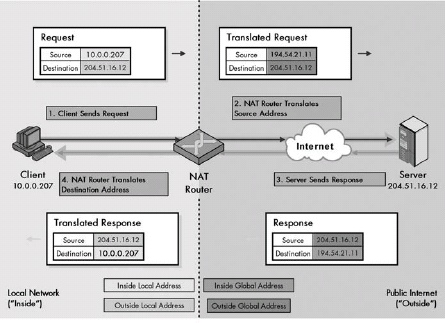

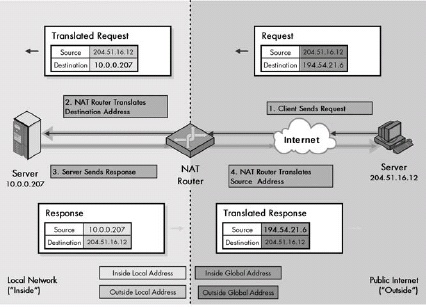

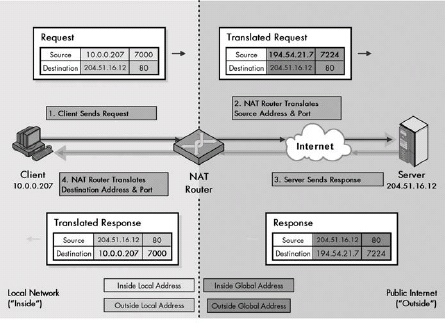

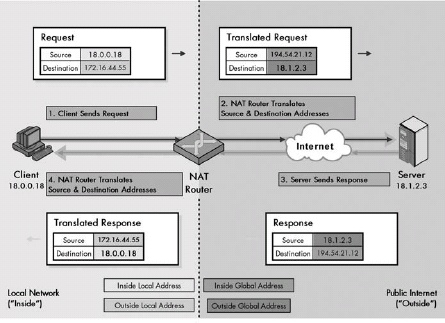

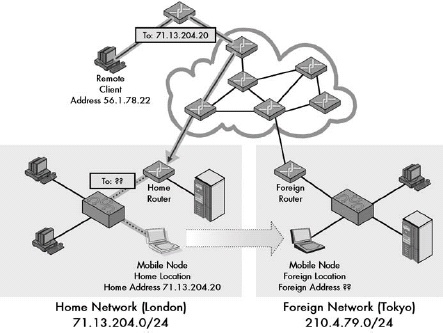

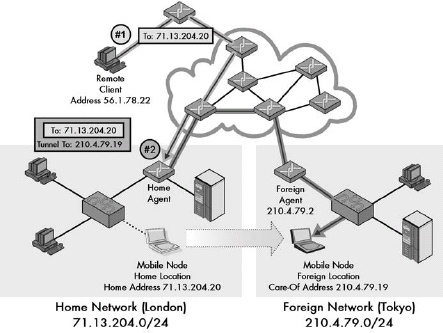

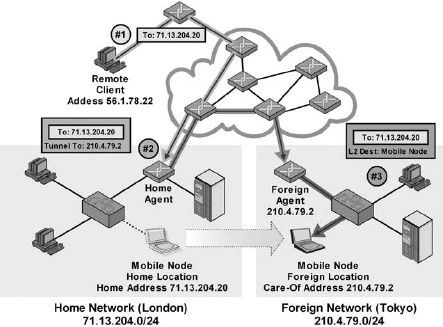

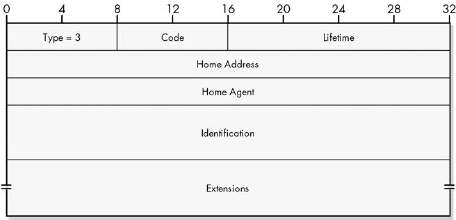

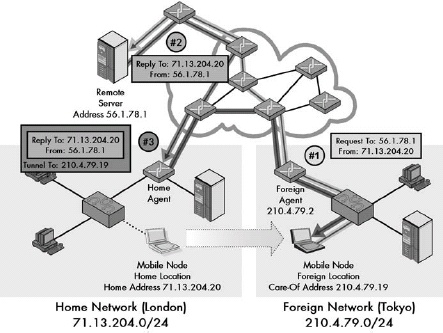

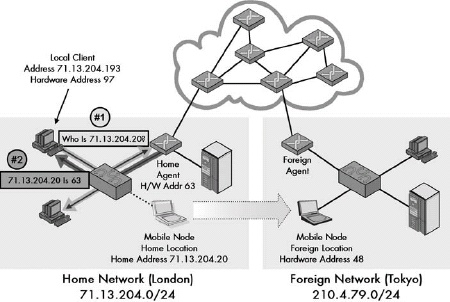

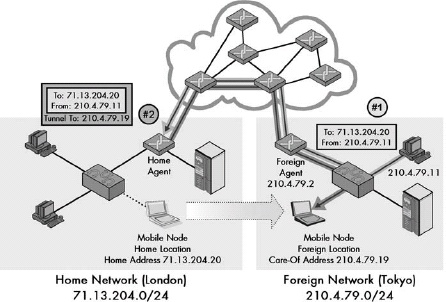

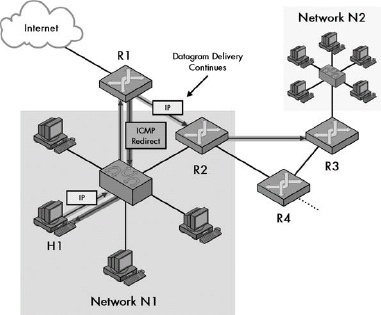

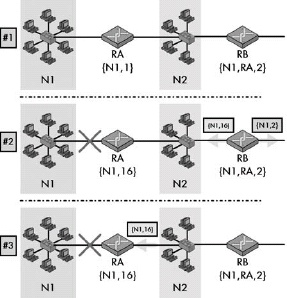

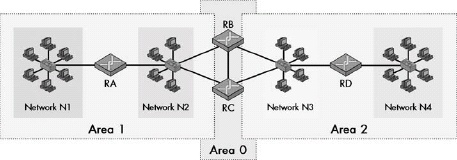

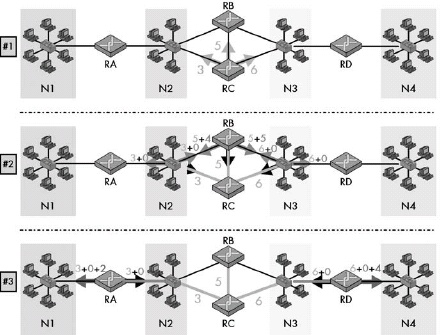

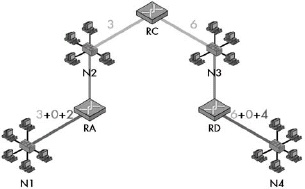

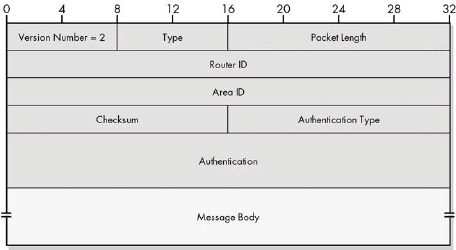

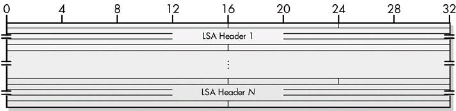

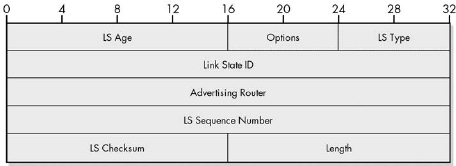

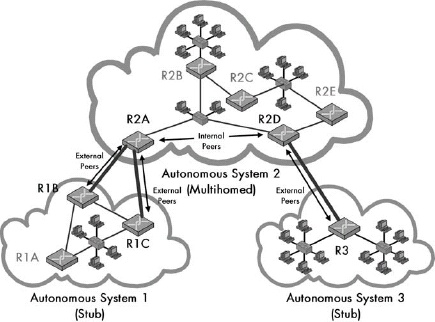

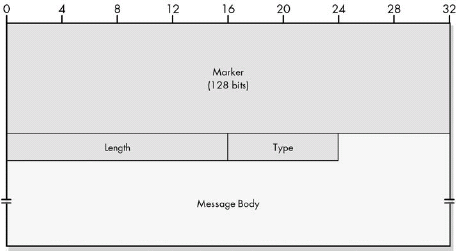

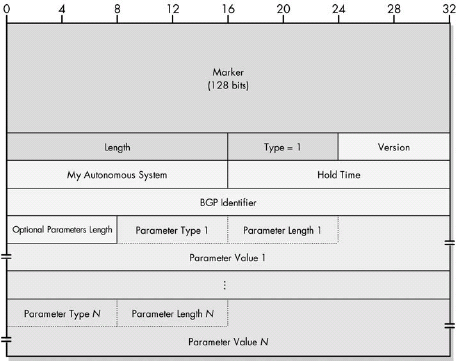

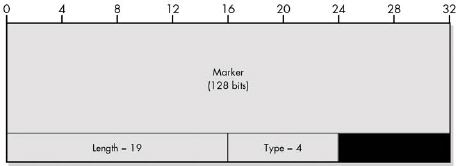

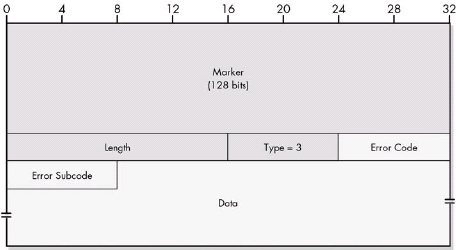

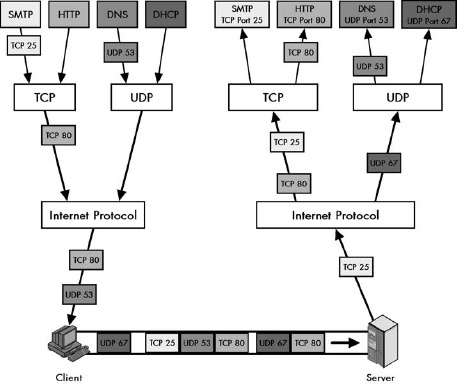

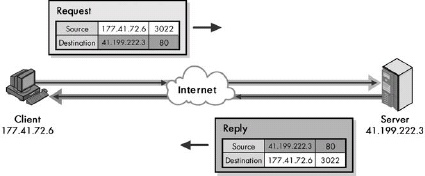

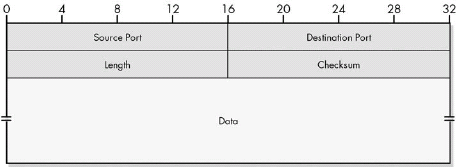

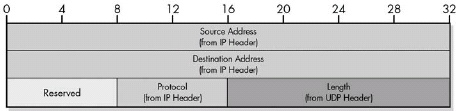

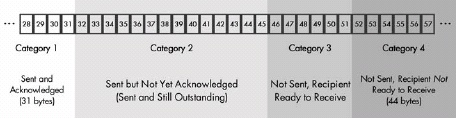

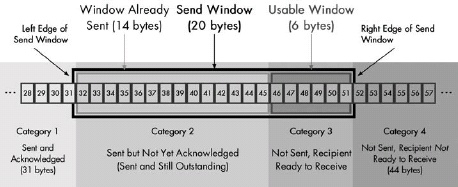

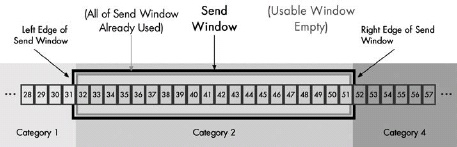

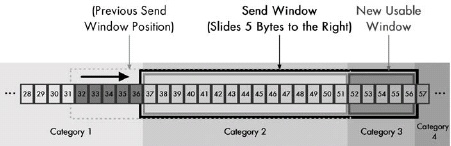

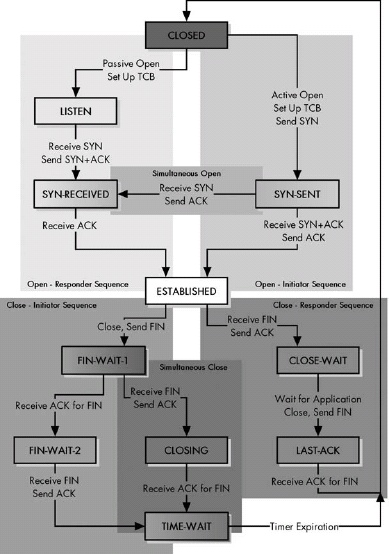

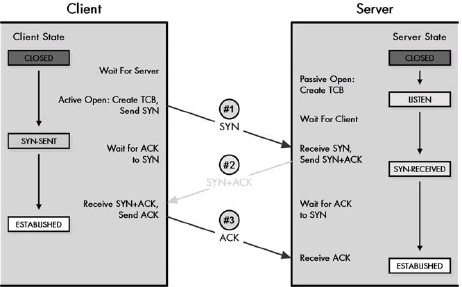

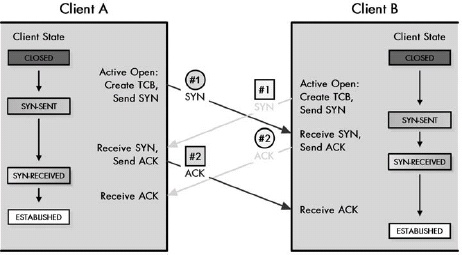

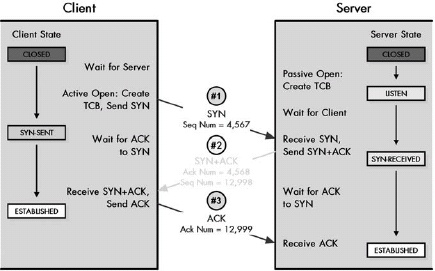

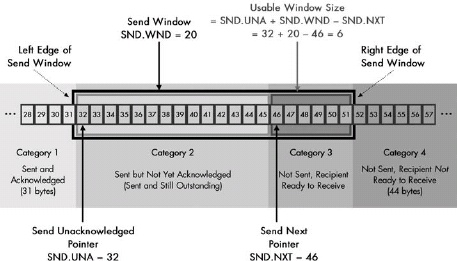

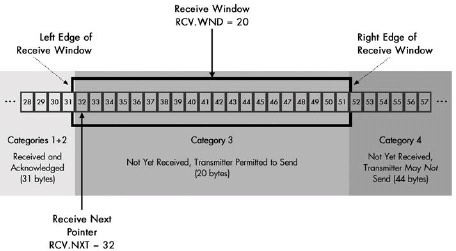

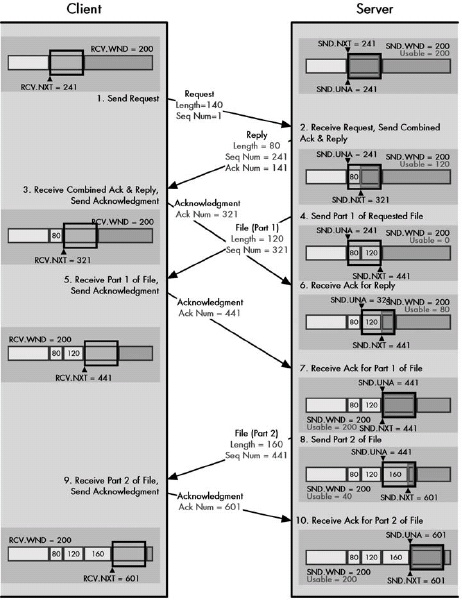

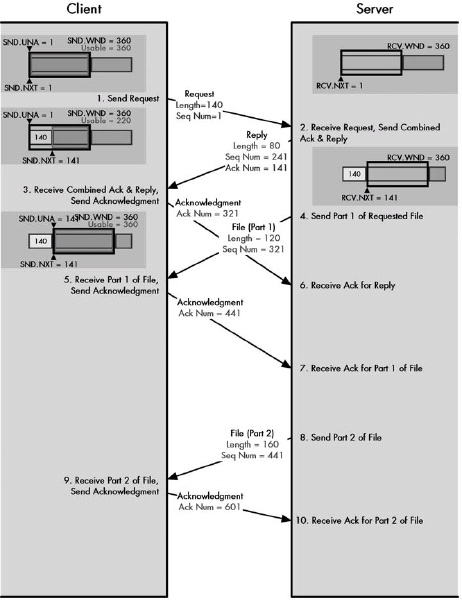

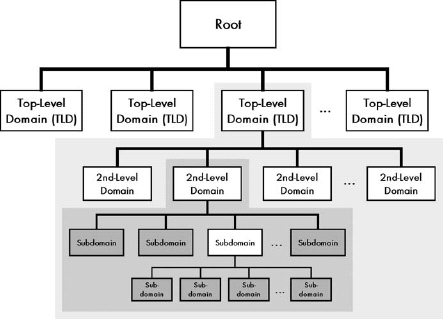

Figure 23-1. Direct and indirect (routed) delivery of IP datagrams This diagram shows three examples of IP datagram delivery. The first transmission (#1, dark arrow) shows a direct delivery between two devices on the local network. The second (#2, light arrow) shows indirect delivery within the local network, between a client and server separated by a router. The third (#3, medium arrow) shows a more distant indirect delivery, between a client on the local network and a server across the Internet.